You are about to pay the “AI Tax,” and you won’t even get an AI feature in return.

If you have tried to buy a high-performance laptop in February 2026, you may have noticed something strange. The prices are up, but the specifications are… stagnant. Or worse, they have regressed. The $1,500 “Pro” laptop that came with 32GB of RAM in 2024 now comes with 16GB. The budget phone that should have graduated to 12GB is stuck at 8GB.

Mainstream tech reporting calls this a “supply chain glitch” or a “post-pandemic correction.” It is wrong.

This is a permanent structural shift in how the world allocates silicon. On February 2, 2026, TrendForce reported that DRAM contract prices surged 90-95% in a single quarter. This is not inflation. This is a hostile takeover of the global wafer supply by the Artificial Intelligence sector.

The memory in your laptop is being cannibalized to feed the data centers. And the physics of semiconductor manufacturing guarantees that this shortage will not end soon.

The Zero-Sum Wafer Game

To understand why your laptop is getting worse, you have to look at the silicon wafer.

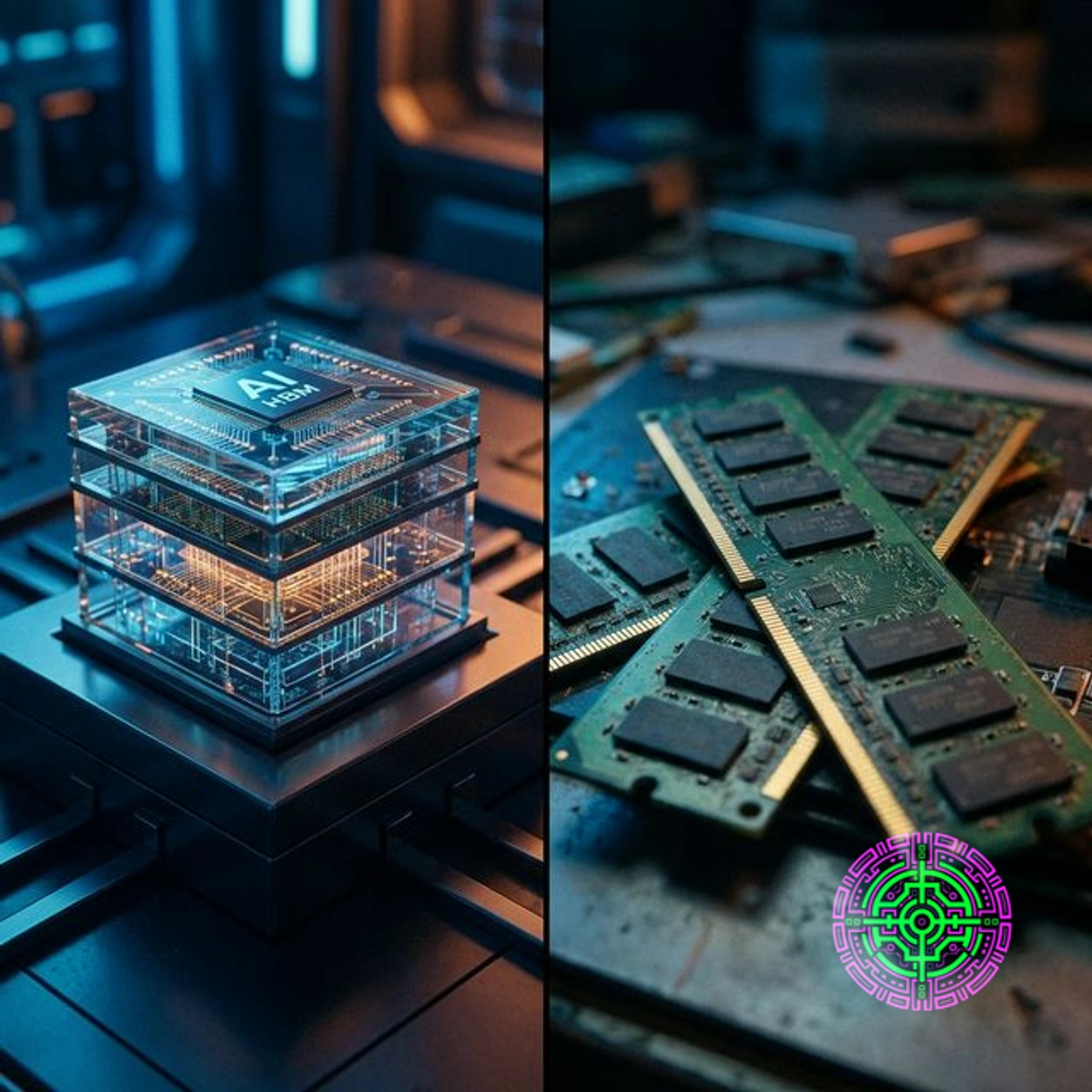

There is a finite amount of clean-room capacity in the world to process silicon wafers. Samsung, SK Hynix, and Micron - the “Big Three” oligopoly controlling 95% of the market - can use that capacity to build two things:

- DDR5: The commodity memory used in your PC, phone, and car.

- HBM (High Bandwidth Memory): The specialized, 3D-stacked memory used in Nvidia H100/B200 GPUs.

For years, this balance was stable. But the AI boom broke it. HBM is not just “faster” RAM; it consumes far more wafer area per bit.

The 3:1 Penalty

HBM achieves its incredible speed by stacking memory dies vertically and connecting them with thousands of microscopic pillars called Through-Silicon Vias (TSVs). This architecture comes with a massive penalty in manufacturing efficiency.

Because each HBM die is larger (to make room for the TSVs) and yield rates are lower (due to the complexity of stacking), the math is brutal:

For every gigabyte of HBM produced, the manufacturer sacrifices the wafer capacity to produce roughly three gigabytes of standard DDR5. This ratio effectively creates a triple tax on global supply. When SK Hynix commits enough wafers to ship Nvidia 10,000 wafers’ worth of memory bits as HBM, the consumer market doesn’t lose 10,000 wafers’ worth of DDR5. It loses 30,000.
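The wafer arithmetic can be sketched in a few lines of Python. This is a minimal model, assuming the 3:1 figure means HBM needs about three times the wafer starts of DDR5 to deliver the same number of bits; the function names and the 100,000-wafer fab are hypothetical, chosen only for illustration.

```python
# Assumption: the 3:1 penalty, read as "HBM needs ~3x the wafer starts of
# DDR5 to deliver the same number of bits". Illustrative, not a vendor figure.
HBM_WAFER_PENALTY = 3.0


def ddr5_wafers_displaced(hbm_bits_in_ddr5_wafers: float,
                          penalty: float = HBM_WAFER_PENALTY) -> float:
    """DDR5 wafers forgone to ship a given amount of HBM.

    The HBM amount is measured in DDR5-wafer equivalents (how many DDR5
    wafers would hold the same bits). Building those bits as HBM takes
    `penalty` times as many wafer starts, each of which could have been
    one DDR5 wafer instead.
    """
    return hbm_bits_in_ddr5_wafers * penalty


def bit_output(total_wafers: float, hbm_share: float,
               penalty: float = HBM_WAFER_PENALTY) -> tuple[float, float]:
    """Split a fab's wafer starts between DDR5 and HBM.

    Returns (ddr5_bits, hbm_bits), both in DDR5-wafer equivalents.
    """
    hbm_wafers = total_wafers * hbm_share
    ddr5_wafers = total_wafers - hbm_wafers
    return ddr5_wafers, hbm_wafers / penalty


print(ddr5_wafers_displaced(10_000))  # 30000.0

# A hypothetical 100,000-wafer-per-month fab converting 30% of its starts:
ddr5, hbm = bit_output(100_000, 0.30)
print(f"DDR5: {ddr5:,.0f}  HBM: {hbm:,.0f}  total: {ddr5 + hbm:,.0f}")
# DDR5: 70,000  HBM: 10,000  total: 80,000
```

The second function shows why conversion shrinks total supply: moving 30% of wafer starts to HBM removes 30,000 wafers of DDR5 but adds back only 10,000 wafers’ worth of bits, so the fab’s total bit output falls by a fifth.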

In 2026, the demand for HBM is effectively infinite. Nvidia, OpenAI, and the hyperscalers have bought out the entire production run of HBM3e and HBM4 for the year. To meet this demand, the Big Three are not just building new factories (which takes 3-4 years); they are converting existing lines.

Every time a fabrication line is converted to HBM, the global supply of consumer DDR5 shrinks disproportionately. The semiconductor industry acts as a massive funnel, and right now, the sheer gravity of AI capital is bending that funnel away from the consumer.

The “Sold Out” Signal

In January 2026, SK Hynix and Micron both confirmed that their entire 2026 HBM supply was sold out. This sounds like good news for their shareholders, but it is a red alert for the consumer electronics market.

When the high-margin product (HBM) is sold out, manufacturers aggressively reallocate every spare millimeter of clean-room space to make more of it. They essentially stop caring about the low-margin DDR5 commodity market.

This has triggered a “spec regression” in consumer tech.

- Smartphones: The promised jump to 16GB as the standard for flagship Android phones has stalled. OEMs cannot absorb a 90% price hike on memory without pushing handset prices past the psychological $1,000 and $1,200 barriers. Mid-range phones, which were trending toward 12GB as a standard in 2025, are being forced back to 8GB to protect margins.

- Laptops: The industry is seeing a bifurcation. “AI PCs” come with 32GB/64GB of RAM and a massive price premium ($2,500+). Standard consumer laptops are being squeezed back down to 8GB/16GB configurations to maintain the $999 price point. This creates a “luxury gap” where adequate performance becomes a premium feature.

The Oligopoly’s Choice

Why don’t Samsung and Micron just build more factories? They are. But they are building them for AI.

Samsung is investing $44 billion in its P5 Pyeongtaek fab, but the tooling is heavily weighted toward HBM logic and advanced packaging. Micron’s $24 billion Singapore expansion is similarly targeted.

Crucially, the Big Three have no incentive to flood the market with cheap DDR5. The current situation, in which AI giants pay any price for HBM while desperate PC makers pay 90% more for scarce DDR5, is the most profitable scenario in the history of the memory industry. It is a “Supercycle” fueled by a double shortage.

Analysts have noted that this behavior mirrors the oil industry’s “capital discipline” era. Just as oil majors abandoned “drill, baby, drill” expansion to maximize dividends, memory makers are refusing to overbuild commodity capacity. They have found that shortage is more profitable than abundance.

The Mobile Squeeze: Why Your Phone Feels Slower

The impact reaches beyond just the price tag; it affects the longevity of the device.

Modern operating systems are becoming more memory-hungry. The latest versions of Android and iOS both integrate local neural networks for features like auto-correct, photo processing, and voice assistants. These models reside in RAM.

- The OS Tax: A typical mobile OS in 2026 reserves 4-5GB of RAM just for the system and background AI services.

- The App Gap: If a phone ships with 8GB of RAM, that leaves only 3-4GB for actual apps. This leads to aggressive “app killing,” where the phone closes background apps constantly to save memory.

- The Usage Reality: The result is a device that feels sluggish not because the processor is slow, but because it is constantly reloading apps from storage.
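The memory budget behind these bullets is simple subtraction. A minimal sketch, taking the 4-5GB OS reservation as given; `app_headroom_gb` is a hypothetical helper, and real phones manage memory dynamically (compression, swap, app suspension), so this is only a rough approximation.

```python
def app_headroom_gb(total_ram_gb: float, os_reserved_gb: float) -> float:
    """RAM left for apps once the OS and background AI services take their share."""
    return max(total_ram_gb - os_reserved_gb, 0.0)


# Assumption from the text: the OS reserves 4-5 GB in 2026.
OS_RESERVED_MIN_GB, OS_RESERVED_MAX_GB = 4.0, 5.0

for total in (8, 12, 16):
    worst = app_headroom_gb(total, OS_RESERVED_MAX_GB)
    best = app_headroom_gb(total, OS_RESERVED_MIN_GB)
    print(f"{total} GB phone: {worst:.0f}-{best:.0f} GB for apps")
# 8 GB phone: 3-4 GB for apps
# 12 GB phone: 7-8 GB for apps
# 16 GB phone: 11-12 GB for apps
```

Under these assumptions, moving from 8GB to 12GB at least doubles the room left for apps, which is why the stalled upgrade matters more than the raw 50% capacity difference suggests.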

By forcing OEMs to stick with 8GB/12GB limits, the memory shortage ensures that 2026 devices will age faster than their predecessors. It is unintended planned obsolescence.

Spec Regression in the Laptop Market

The regression is most visible in the laptop sector. For the past decade, the “sweet spot” for memory shifted reliably every 3-4 years.

- 2015: 4GB → 8GB

- 2019: 8GB → 16GB

- 2023: 16GB → 32GB (Projected)

That projection failed. In 2026, 16GB is firmly entrenched as the “Standard,” while 8GB persists in the budget tier like a zombie specification that refuses to die.

Dell, HP, and Lenovo face a mathematical wall. With memory component costs doubling, they have two choices: raise the price of the laptop by $100, or cut the memory in half. Market research shows consumers are extremely price-sensitive at the $999 and $1,299 price points. So, the memory gets cut.
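The “mathematical wall” is easiest to see with numbers. The sketch below assumes a hypothetical price of $6.25 per GB, chosen only so that 16GB costs $100 before the increase; real OEM contract pricing is not public, and the sketch ignores the OEM’s margin on top of the bill of materials.

```python
def memory_options(capacity_gb: float, old_price_per_gb: float,
                   price_multiplier: float) -> tuple[float, float]:
    """Return (extra cost to keep capacity, capacity affordable at the old budget)."""
    old_cost = capacity_gb * old_price_per_gb
    new_price_per_gb = old_price_per_gb * price_multiplier
    extra_cost = capacity_gb * new_price_per_gb - old_cost
    same_budget_gb = old_cost / new_price_per_gb
    return extra_cost, same_budget_gb


# Hypothetical: $6.25/GB before the surge, memory costs doubling (2x).
extra, capacity = memory_options(16, 6.25, 2.0)
print(f"Keep 16 GB: +${extra:.0f} per laptop")
print(f"Hold the memory budget: {capacity:.0f} GB")
# Keep 16 GB: +$100 per laptop
# Hold the memory budget: 8 GB
```

When the price per GB doubles, holding memory spend constant means halving capacity, which is exactly the trade-off an OEM defending the $999 price point faces.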

The result is a fleet of 2026 laptops that, on paper, often look identical to 2023 models but cost 20% more once adjusted for inflation and component quality.

The JEDEC Compromise

Deep in the technical weeds, there is another sign of the crisis: the loosening of standards.

JEDEC, the body that sets memory standards, is under pressure as manufacturers struggle to maintain yields. As they sprint to produce DDR5 chips on increasingly crowded lines, there are reports of “binning flexibility”: chips that might have been rejected for top-tier timings in 2024 are finding their way into consumer products in 2026 to keep volume flowing.

This doesn’t mean your RAM will crash. It means the “overclocking headroom” and tight latency timings enthusiasts enjoyed are vanishing from the mid-range. You are getting spec-compliant silicon, but nothing more. The “Silicon Lottery” has fewer winners when every golden ticket is sent to a data center.
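Binning itself is a sorting step: each die is tested, then assigned the highest speed grade it clears with some safety margin. The sketch below is a hypothetical illustration, not any manufacturer’s test flow; the DDR5 data rates serve only as example bin labels, and `guard_band_mts` is an assumed margin. Shrinking the guard band lets a marginal die ship in a higher bin, but leaves less headroom above its rating.

```python
from typing import Optional

# Example bin labels (DDR5 data rates in MT/s), fastest first. Illustrative.
SPEED_GRADES_MTS = (6400, 5600, 4800)


def bin_die(max_stable_mts: int, guard_band_mts: int = 0,
            grades: tuple[int, ...] = SPEED_GRADES_MTS) -> Optional[tuple[int, int]]:
    """Assign a tested die to the fastest grade it clears with margin.

    Returns (grade, headroom), where headroom is how far the die's measured
    limit sits above its rated speed, or None if it fails every grade.
    """
    for grade in grades:
        if max_stable_mts >= grade + guard_band_mts:
            return grade, max_stable_mts - grade
    return None


# The same hypothetical die, stable up to 6,600 MT/s on the tester:
print(bin_die(6600, guard_band_mts=400))  # (5600, 1000): conservative binning
print(bin_die(6600, guard_band_mts=0))    # (6400, 200): loose binning
print(bin_die(4700))                      # None: rejected
```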

The Consumer “Spec Cliff”

What does this mean for the buyer in 2026?

- Buy RAM in Q1 2026: If you are planning a PC build or a server upgrade, buy the memory immediately. The spot price (what you pay at retail) typically lags the contract price (what Dell pays Samsung) by 3-4 months. The 95% contract surge reported in February by TrendForce will hit Newegg and Amazon shelves by Q2/Q3. The window to buy at “old” prices is closing rapidly.

- Beware of Soldered RAM: Laptop manufacturers will increasingly solder RAM to the motherboard to save space and cost. With costs rising, they will offer lower base configurations (e.g., 12GB) with no path to upgrade. Avoid these machines. 16GB is the absolute minimum for modern Windows/macOS workflows; 32GB is the new standard for longevity, even if you have to pay the “AI Tax” to get it.

- The Used Market is Gold: Paradoxically, a high-spec 2024 or 2025 laptop might offer better value than a mid-range 2026 model. The depreciation curve on used hardware has flattened because new hardware prices have spiked. A used MacBook Pro M3 or a ThinkPad X1 Carbon Gen 12 with 32GB of RAM is now an appreciating asset relative to the stripped-down 2026 replacements.
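The contract-to-retail lag in the first bullet can be modeled as a simple time shift. This is a minimal sketch under a strong simplifying assumption (retail tracks contract prices one-for-one after a fixed delay); the index values and the February placement of the jump are hypothetical, with only the 95% size of the jump taken from the TrendForce figure.

```python
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul"]

# Hypothetical contract-price index: flat at 100, with the 95% jump
# placed in February for illustration.
CONTRACT_INDEX = [100, 195, 195, 195, 195, 195, 195]


def retail_index(contract: list[float], lag_months: int) -> list[float]:
    """Retail price index, assuming it repeats contract prices lag_months later.

    Months before the lag catches up keep the first observed value.
    """
    if lag_months <= 0:
        return list(contract)
    shifted = [contract[0]] * lag_months + list(contract)
    return shifted[:len(contract)]


def first_month_at(series: list[float], level: float) -> str:
    """Name of the first month whose value reaches `level`."""
    for month, value in zip(MONTHS, series):
        if value >= level:
            return month
    return "not yet"


for lag in (3, 4):
    month = first_month_at(retail_index(CONTRACT_INDEX, lag), 195)
    print(f"{lag}-month lag: retail jumps in {month}")
# 3-month lag: retail jumps in May
# 4-month lag: retail jumps in Jun
```

Under either lag the retail jump lands in Q2, which is the reasoning behind buying in Q1.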

The Future: Jevons Paradox

The “RAMpocalypse” is an economic manifestation of Jevons Paradox: when technology makes the use of a resource more efficient, total consumption of that resource can rise rather than fall. Here, ever more efficient AI compute drives demand so high that it swallows the world’s memory supply.
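A textbook way to see the paradox is a constant-elasticity demand model: if efficiency makes each unit of service cheaper, and demand for that service is elastic enough (price elasticity above 1), total resource use rises. The sketch below is that standard toy model, not a model of the actual memory market; `elasticity` and the unit cost are assumptions.

```python
def resource_use(efficiency: float, elasticity: float,
                 cost_per_unit_resource: float = 1.0) -> float:
    """Total resource consumed under constant-elasticity demand.

    efficiency: units of service produced per unit of resource.
    elasticity: price elasticity of demand for the service (positive number).
    """
    price_per_service = cost_per_unit_resource / efficiency
    service_demand = price_per_service ** (-elasticity)
    return service_demand / efficiency


for elasticity in (0.5, 1.0, 1.5):
    before = resource_use(1.0, elasticity)
    after = resource_use(2.0, elasticity)  # efficiency doubles
    print(f"elasticity {elasticity}: resource use x{after / before:.2f}")
# elasticity 0.5: resource use x0.71
# elasticity 1.0: resource use x1.00
# elasticity 1.5: resource use x1.41
```

Only when demand is elastic does doubling efficiency increase total consumption; the argument in this section amounts to a claim that AI demand for compute sits in that regime.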

AI was supposed to make the economy more efficient. Instead, it is physically eating the silicon that runs personal devices. The data centers require such vast quantities of high-speed memory that they have distorted the gravity of the entire supply chain.

Until the wave of new fabs comes online in 2028-2029, the consumer electronics market will remain a secondary priority. The irony is sharp: consumer data fueled the AI revolution, but now consumers are being priced out of the hardware needed to access it. Your laptop is merely collateral damage in the war for intelligence.
