The consensus in early 2024 was that the “Scaling Laws” would eventually hit a physical and economic wall of diminishing returns, allowing efficient, leaner models to take the crown. DeepSeek, the Hangzhou-based artificial intelligence (AI) powerhouse, became the poster child for this movement. By training its V3 model for a fraction of the cost of GPT-4o, it convinced a generation of investors that software cleverness could bypass the silicon toll booth.

That narrative evolved on January 2, 2026.

While the tech world was focused on the ongoing CES 2026 keynotes and xAI’s massive 20 billion USD Series E funding round, DeepSeek quietly released a technical report for DeepSeek-MHC (Manifold-Constrained Hyper-connections). The report isn’t just another model update. It is a fundamental challenge to the “Compute Moat” theory that defined 2025. It signals that the era of “brute-force” scaling, where the only answer to intelligence was more GPUs, is facing a structural challenge from architectural innovation.

The Analytical Gap: The Capex Trap vs. Architectural Alpha

The mainstream tech press is currently obsessed with the “arms race” between OpenAI, Google, and xAI. In January 2026, Elon Musk confirmed xAI’s intent to build a cluster of one million GPUs. This is the Capex Trap: the belief that the entity with the most substations and the most silicon wins by default. This strategy relies on the assumption that intelligence is a linear function of compute, but the MHC paper suggests that assumption is dangerously oversimplified.

DeepSeek-MHC exposes the gap in this logic. DeepSeek proved in early 2025 that a frontier model could be trained on a cluster of 50,000 Nvidia GPUs for roughly 1.6 billion USD. This was a fraction of the 10 billion USD clusters being commissioned by Western hyperscalers at the time. However, the MHC paper suggests that even that 1.6 billion USD expenditure might soon be considered “brute-force” in the rearview mirror.

The core of the gap lies in the Manifold-Constrained ROI. While Silicon Valley uses capital to buy its way through scaling bottlenecks, DeepSeek is using high-level mathematics to bypass them. As intelligence becomes cheaper to produce via architectural “Alpha,” the massive 100 billion USD data centers planned for 2027 risk becoming the most expensive “stranded assets” in history. These facilities are built for a specific type of workload that DeepSeek is actively making obsolete. If the workload for a GPT-5 class model can be compressed by 70 percent via MHC, the extra 300,000 GPUs in an xAI cluster become a liability, not an asset.

Technical Deep Dive: Manifold-Constrained Hyper-connections (MHC)

To understand why DeepSeek-MHC is a threat to the GPU-maximalist world, analysts must look at the “Interconnect Bottleneck.” In traditional large models, the data movement between layers often consumes more energy and time than the actual compute operations. As models grow to 600 billion or 1 trillion parameters, the “surface area” for data communication explodes. DeepSeek-MHC introduces a new way to link neural pathways by constraining these “hyper-connections” to a low-dimensional manifold.

In a standard transformer architecture, every neuron in Layer N potentially connects to every neuron in Layer N+1. This is mathematically exhaustive but physically inefficient. Manifold-Constrained Hyper-connections operate on the principle that most of this high-dimensional space is noise. By projecting high-dimensional data into these constrained, low-rank manifolds before communication, DeepSeek reduces the required networking bandwidth for a model by nearly 70 percent.
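The bandwidth arithmetic behind that claim can be illustrated with a toy low-rank projection. This is a minimal sketch, not DeepSeek's method: the helper names (`project`, `reconstruct`, `bandwidth_saving`), the axis-aligned basis, and the sizes `D = 10` and `R = 3` are all assumptions chosen so that the saving works out to 70 percent. It only shows that sending `R` coefficients instead of `D` raw values cuts traffic by `1 - R/D`, and that nothing is lost when the signal already lies in the low-rank subspace.

```python
def project(x, basis):
    """Compress x (length d) into r coefficients, one per orthonormal basis row."""
    return [sum(b * v for b, v in zip(row, x)) for row in basis]


def reconstruct(coeffs, basis):
    """Rebuild a length-d vector from r coefficients and the same basis."""
    d = len(basis[0])
    return [sum(c * row[j] for c, row in zip(coeffs, basis)) for j in range(d)]


def bandwidth_saving(d, r):
    """Fraction of values that no longer need to cross the interconnect."""
    return 1.0 - r / d


# Hypothetical sizes: a 10-dimensional activation sent as 3 coefficients.
D, R = 10, 3

# Assumed basis: the first R coordinate axes (trivially orthonormal).
BASIS = [[1.0 if j == i else 0.0 for j in range(D)] for i in range(R)]

# An activation that already lies in the subspace spanned by BASIS.
x = [1.5, -2.0, 0.5] + [0.0] * (D - R)

sent = project(x, BASIS)             # only R numbers are communicated
restored = reconstruct(sent, BASIS)  # the receiver expands back to D numbers

print(len(x), "values reduced to", len(sent))
print("saving:", round(bandwidth_saving(D, R), 2))
```

In a real system the basis would be learned and the signal would only approximately fit the subspace, so the reconstruction would carry some error; the trade-off between that error and the bandwidth saved is the design question this sketch leaves out.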

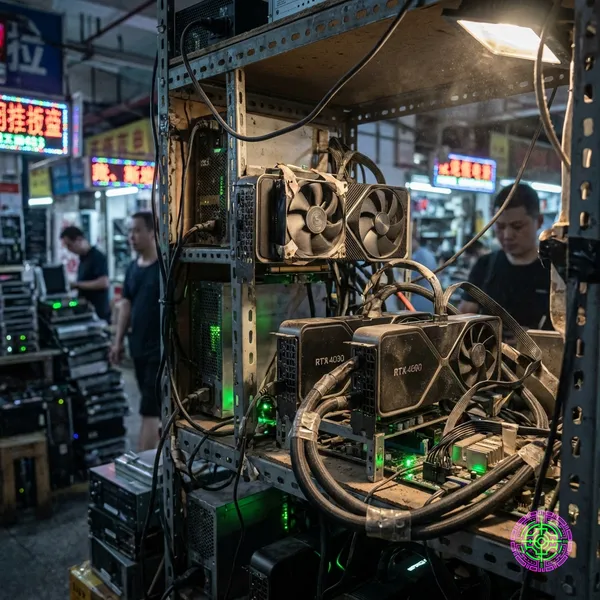

This isn’t just about saving bandwidth; it is about “Unifying the Compute.” This allows DeepSeek to achieve “Frontier Reasoning” on hardware that is technically two generations behind Nvidia’s latest Blackwell Ultra chips. Where a Western model might require the full throughput of an NVLink 5.0 fabric, DeepSeek-MHC can maintain performance on older H800 clusters. This is the true “Sovereign AI” play: engineering around the blockade rather than trying to build a bigger wall.

Furthermore, MHC allows for a higher degree of MoE (Mixture of Experts) granularity. By constraining the manifold, the router can make more precise decisions about which expert to activate without the overhead of massive communication kernels. This leads to a model that is electrically “dark” for 95 percent of its parameters at any given time, yet remains fully responsive.
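To make the “95 percent dark” figure concrete, here is a minimal sketch of generic top-k expert routing. It is not the MHC router: the function names, the expert count of 40, and `K = 2` are assumptions picked so that the inactive share of experts comes out to 95 percent. It also counts only expert parameters and ignores shared components such as attention layers and embeddings.

```python
import math


def top_k_route(scores, k):
    """Pick the k highest-scoring experts and softmax-normalise their weights."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(scores[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def inactive_fraction(num_experts, k):
    """Share of experts that stay idle for a single token."""
    return 1.0 - k / num_experts


# Hypothetical configuration: 40 experts, 2 active per token.
NUM_EXPERTS, K = 40, 2

# Made-up router scores for one token; experts 7 and 21 score highest.
scores = [0.0] * NUM_EXPERTS
scores[7], scores[21] = 3.0, 2.0

weights = top_k_route(scores, K)
print(sorted(weights))  # indices of the experts that run for this token
print("inactive:", round(inactive_fraction(NUM_EXPERTS, K), 2))
```

Finer expert granularity in this picture means raising the expert count while keeping the active count small, which only pays off if the routing decision itself stays cheap to compute and communicate.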

The Failure of Sovereign AI: The “Compute Ghetto”

Throughout 2025, several nations attempted to build “Sovereign AI” by purchasing small clusters of 5,000 to 10,000 GPUs. In January 2026, these initiatives are facing a crisis of relevance. They have neither the raw scale of xAI nor the architectural “clout” of DeepSeek. They are stuck in the “Compute Ghetto,” where they own enough hardware to be expensive but not enough to be intelligent.

National clusters are often limited by local power grids and a lack of custom software kernels. A cluster of 10,000 GPUs running standard, unoptimized code is effectively a toy compared to the MHC-based clusters in Hangzhou. The result is a performance gap that makes it impossible for national models to keep pace. The “cultural importance” of a sovereign model is quickly outweighed by the fact that the DeepSeek model is 5x faster and can be run on-premises for a fraction of the electricity cost. The dream of “Sovereign AI” was that every nation would have its own refinery; the reality is that they only have access to the raw crude.

A History of Capital Extinction: The Fiber Boom Rhyme

Historical patterns suggest this is a recurring phenomenon. In the late 1990s, the “Fiber Boom” was driven by the belief that internet traffic would double every few months. The industry was right about the technology, but wrong about the economics. Companies like Global Crossing laid millions of miles of fiber that were never fully utilized until a decade later, long after those companies had gone bankrupt.

DeepSeek is repeating this history by forcing a commoditization of intelligence. By proving that architectural efficiency can overcome a 10x disadvantage in hardware scale, they are turning the AI industry’s economics upside down. The “efficiency” they prize is not about saving money; it is about making the capital advantage of their competitors irrelevant. In the same way that a more efficient internal combustion engine eventually made “more cylinders” a niche requirement rather than a standard, MHC is making the “one million GPU cluster” look like a monument to a fading era.

The Forward-Looking Analysis: The 2027 GPU Glut

Industry veterans are now watching the approach of the Inference Cliff. By late 2026, the global capacity to generate high-quality tokens will likely exceed the human capacity to consume them. This will lead to a collapse in the value of “generic” compute. As Blackwell units flood the market, the older H100 and A100 clusters will become “stranded assets.”

The market is likely to see a wave of “AI Cloud” bankruptcies as the ROI on a 20 billion USD investment fails to materialize. The companies that survive will be those that pivot from “building models” to “capturing unique real-world data” or those that follow the DeepSeek path of extreme architectural optimization. The industry is entering the “Efficiency Winter,” where the only way to stay warm is to burn less fuel.

The Physics of Extinction

The DeepSeek-MHC release reveals the state of AI in January 2026. Developers cannot “code” their way out of a capex war, but they can use code to move the goalposts. An engineer may be the smartest person in the room with the most elegant math, but the industry is moving toward a bifurcated future: those who own the grid, and those who own the math.

Sovereign AI is not failing because of a lack of talent. It is failing because the “Minimum Viable Intelligence” now requires a level of vertical integration that most nations cannot achieve. As noted in the analysis of the Sovereign AI Arms Race, compute has become the new oil, and the refineries are usable only by those who understand the chemistry of the fuel. The efficiency myth has been exposed: it was never a way to skip the hardware race; it was the entry fee to stay in the game. Even the breakthroughs of DeepSeek-V3 were merely the prelude to the MHC revolution.

The final verdict is clear: The age of the scrappy underdog is over. The age of the AI Industrial Complex, where math and machines are fused into a singular economic weapon, has begun. If you don’t own the manifold, you don’t own the model.
