The strategy seemed flawless on paper. In October 2022, and again in late 2023, the US government effectively erased China’s access to the bleeding edge of silicon. By banning the export of Nvidia’s H100 and A100 GPUs, Washington aimed to decapitate Beijing’s artificial intelligence ambitions by starving them of compute.

The theory was simple: Modern AI is a function of brute force. The more FLOPs (Floating Point Operations) a nation possesses, the smarter its models become. Deny the FLOPs, deny the future.

Three years later, that strategy has backfired.

Instead of crippling Chinese AI, the sanctions imposed an evolutionary pressure that Silicon Valley never faced. While OpenAI and Google expanded their infrastructure with 100,000-unit H100 clusters, consuming gigawatts of power to solve problems with raw scale, Chinese labs like DeepSeek were forced to solve them with mathematics.

DeepSeek V4 emerged in early 2026. The system represents a new species of “Lean AI” that uses algorithmic elegance to bypass the hardware blockade entirely, diverging sharply from standard LLMs. In a twist of irony, its efficiency innovations, specifically the “Engram” architecture, promise to perform reasoning tasks on hardware as accessible as a high-end consumer workstation.

The blockade was intended to starve the industry. Instead, it taught engineers how to fast.

The Optimization Trap

To understand why DeepSeek V4 is a paradigm shift, one must understand the “Lazy Era” of Western AI (2023-2025).

In the West, access to compute was effectively infinite. If a model lacked intelligence, the solution was universally to “make it bigger.” This is the Scaling Law dogma: increased parameter count plus increased data equals increased intelligence. It works, but it is inefficient. It is the engineering equivalent of installing a V12 engine in a golf cart to improve acceleration.

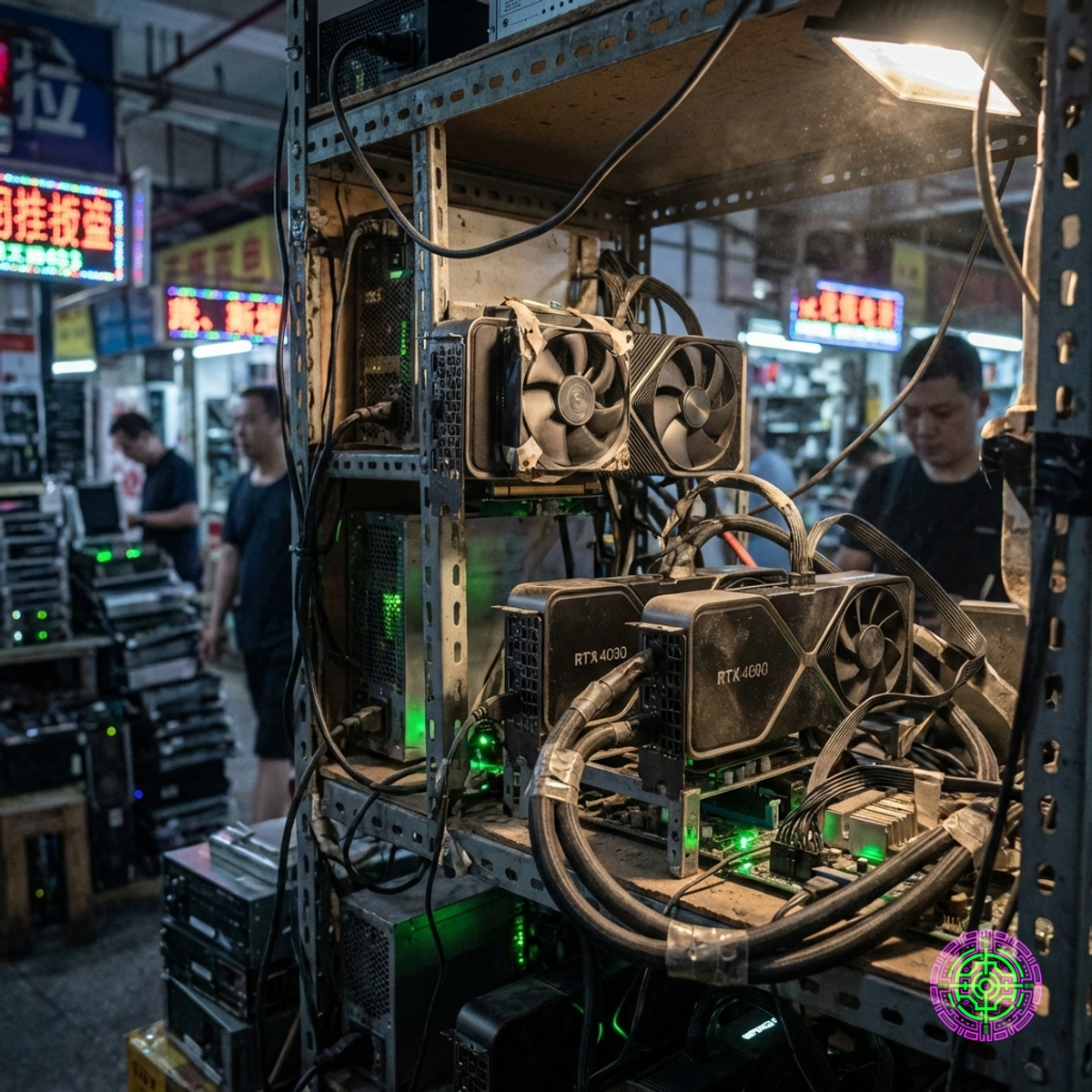

China did not have that luxury. With black market H100s trading for $50,000 to $120,000, double or triple the MSRP, every floating-point operation had to justify its existence. This scarcity forced engineers to investigate the inefficiencies of the Transformer architecture itself.

- Why recalculate the same facts repeatedly?

- Why must every token pay attention to every other token?

- Why are neural networks so unstable that they require massive redundancy?

DeepSeek V4 answers these questions with three specific technologies: Engram, DSA, and mHC.

1. Engram: The O(1) Memory Revolution

The most radical innovation in V4 is the Engram architecture, introduced in a paper released in January 2026.

Standard LLMs suffer from a form of digital amnesia. When asked about the capital of France, GPT-4 “thinks” about it by processing neural weights, effectively re-deriving the answer during every inference pass. This process is computationally expensive.

Engram treats knowledge differently. It separates reasoning (fluid intelligence) from facts (crystallized memory). It offloads static knowledge into a massive, read-only lookup table that resides in system RAM (the standard DDR5 memory on a motherboard), not the scarce GPU VRAM.

In computer science terms, this changes the complexity of knowledge retrieval from a deep neural pass to an O(1) lookup.

By offloading a 100-billion-parameter knowledge table to CPU RAM, DeepSeek V4 operates massive context windows with a fraction of the GPU memory required by Western models. The GPU is freed to focus on reasoning, while the CPU handles rote memorization. This architecture specifically bypasses the VRAM bottleneck of sanctioned cards.
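To make the O(1) claim concrete, here is a minimal sketch of a read-only lookup table held in ordinary host memory. The article does not say how Engram keys its table, so the hashed n-gram key, the table sizes, and the names `knowledge_table`, `ngram_slot`, and `lookup` are all assumptions made for illustration, not DeepSeek's implementation.

```python
import numpy as np

# Hypothetical sizes, chosen only so the sketch runs quickly.
TABLE_SLOTS = 1 << 16   # number of rows in the lookup table
EMBED_DIM = 64          # width of each stored vector
NGRAM = 2               # how many trailing tokens form a key (assumption)

rng = np.random.default_rng(0)
# The table is a plain NumPy array, so it lives in system RAM, not GPU VRAM.
knowledge_table = rng.standard_normal((TABLE_SLOTS, EMBED_DIM)).astype(np.float32)
knowledge_table.setflags(write=False)  # read-only at inference time


def ngram_slot(token_ids):
    """Hash the trailing n-gram of token ids to a table row (FNV-1a style)."""
    h = 0xCBF29CE484222325
    for t in token_ids[-NGRAM:]:
        h ^= int(t)
        h = (h * 0x100000001B3) & 0xFFFFFFFFFFFFFFFF
    return h % TABLE_SLOTS


def lookup(token_ids):
    """One hash plus one array index; the cost does not grow with table size."""
    return knowledge_table[ngram_slot(token_ids)]


vector = lookup([101, 7592, 2088])
print(vector.shape)
```

The point of the sketch is the access pattern: retrieving a stored vector takes one hash and one index no matter how many rows the table has, which is why such a table can sit in cheap system RAM while the GPU handles the rest of the forward pass.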

2. DSA: Sparse Attention Physics

The second pillar is DeepSeek Sparse Attention (DSA).

In a standard Transformer, the “Attention Mechanism” is quadratic, O(n²), in the number of input tokens n. If the length of the input document doubles, the computational work required to process it quadruples. Every word analyzes every other word.

DSA changes this physics. It assumes that for any given word, the vast majority of other words in the document are irrelevant. By implementing a dynamic sparsity mask, DSA reduces the complexity to O(n·k), where k is a small, fixed number of relevant tokens per query.
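The scaling difference is easy to check with arithmetic. The sketch below counts query-key pairs for dense attention and for a sparse scheme in which each token attends to at most k others. The budget of 2,048 relevant tokens is a hypothetical value picked for illustration; the article does not state the figure DSA uses.

```python
def dense_pairs(n):
    """Query-key pairs scored by full attention over n tokens."""
    return n * n


def sparse_pairs(n, k):
    """Pairs scored when each token attends to at most k others."""
    return n * min(k, n)


K_RELEVANT = 2_048  # hypothetical per-token budget, not a published figure

for n in (8_000, 16_000, 128_000):
    dense = dense_pairs(n)
    sparse = sparse_pairs(n, K_RELEVANT)
    print(f"n={n:>7,}  dense={dense:.2e}  sparse={sparse:.2e}  saving={dense / sparse:.1f}x")
```

Doubling the document length quadruples the dense count but only doubles the sparse one, so the saving grows in proportion to the context length.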

In DSA, the full attention matrix is never computed. Instead, top-k routers predict which blocks of text are relevant and compute attention only for those. This allows DeepSeek models to handle 128k+ context windows on hardware that would fail to run a standard Llama-3 model of the same size.
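A toy version of top-k block routing can be written in a few lines of NumPy. This is a sketch under stated assumptions rather than DeepSeek's kernel: the router here scores each block by its mean key vector, and the function name `block_sparse_attention`, the block size, and the single-query interface are all invented for illustration.

```python
import numpy as np


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def block_sparse_attention(q, K, V, block_size=4, top_k=2):
    """Attend one query to the top_k highest-scoring key blocks only.

    q: (d,), K: (n, d), V: (n, d_v); n must be a multiple of block_size.
    """
    n, d = K.shape
    n_blocks = n // block_size
    Kb = K.reshape(n_blocks, block_size, d)
    Vb = V.reshape(n_blocks, block_size, -1)
    # Cheap router (an assumption): score each block by its mean key.
    block_scores = Kb.mean(axis=1) @ q
    keep = np.argsort(block_scores)[-top_k:]
    # Gather only the selected blocks; all other keys are never scored.
    K_sel = Kb[keep].reshape(-1, d)
    V_sel = Vb[keep].reshape(-1, Vb.shape[-1])
    weights = softmax(K_sel @ q / np.sqrt(d))
    return weights @ V_sel, keep


rng = np.random.default_rng(0)
K = rng.standard_normal((16, 8))
V = rng.standard_normal((16, 5))
q = rng.standard_normal(8)
out, kept_blocks = block_sparse_attention(q, K, V)
print(out.shape, sorted(kept_blocks.tolist()))
```

With `top_k` equal to the number of blocks, the function reduces to ordinary dense attention, which makes a convenient correctness check. With a smaller `top_k`, the per-token scoring cost depends on `top_k * block_size` rather than on the full sequence length.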

3. mHC: Stabilizing the Stack

The final piece of the puzzle, detailed in the Manifold-Constrained Hyper-Connections (mHC) paper (December 2025), solves the stability problem of deep networks.

As models get deeper (more layers), the signal passing through them tends to degrade, either exploding (becoming infinite) or vanishing (becoming zero). Western labs solve this with “Layer Normalization,” a brute-force statistical adjustment at every step.

mHC takes a geometric approach. It forces the connection matrices to stay on a mathematical object called the Birkhoff Polytope (doubly stochastic matrices).

By mathematically guaranteeing that the connection matrices cannot amplify the signal as it passes from layer to layer, DeepSeek removes the need for expensive normalization steps. This allows for much deeper, narrower networks that train faster and run with greater stability.
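One standard way to push a matrix onto the set of doubly stochastic matrices is Sinkhorn-Knopp normalization, which alternately rescales rows and columns. The sketch below uses it purely as an illustration; the article does not say which projection mHC uses, and the function name `sinkhorn` and the 4x4 size are assumptions. Note what the constraint does and does not give: a doubly stochastic matrix preserves the sum of the signal and cannot increase its Euclidean norm, but it preserves the norm exactly only in special cases such as permutation matrices.

```python
import numpy as np


def sinkhorn(logits, n_iters=100):
    """Approximately map a square matrix to a doubly stochastic one."""
    M = np.exp(logits - logits.max())  # strictly positive entries
    for _ in range(n_iters):
        M = M / M.sum(axis=1, keepdims=True)  # make each row sum to 1
        M = M / M.sum(axis=0, keepdims=True)  # make each column sum to 1
    return M


rng = np.random.default_rng(0)
H = sinkhorn(rng.standard_normal((4, 4)))
x = rng.standard_normal(4)
y = H @ x

print("row sums:", H.sum(axis=1))
print("col sums:", H.sum(axis=0))
print("signal sum before/after:", x.sum(), y.sum())
print("signal norm before/after:", np.linalg.norm(x), np.linalg.norm(y))
```

Stacking many such matrices keeps the product doubly stochastic, so the signal cannot blow up however deep the stack becomes.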

The Darwinian Price Tag

To fully appreciate this architectural divergence, one must look at the economics that drove it.

In Silicon Valley, the cost of a “Bad Idea” is low. If an engineer runs a training run that is 10% inefficient, the cost is absorbed by the massive venture capital subsidies underpinning the industry. Compute is treated like water; it flows freely.

In Shenzhen, the cost of a “Bad Idea” is existential. With H100s costing upwards of $100,000 on the gray market, a 10% inefficiency isn’t a line item; it’s a project-killing expense. This hyper-inflated cost of compute acted as a Darwinian filter. Only the most efficient code survived.

Bloated architectures, lazy memory management, and redundant calculations were selected against with brutal efficiency. The result is a software stack that is leaner, meaner, and far more optimized than its Western counterparts. While US developers were learning to prompt-engineer, Chinese developers were learning to rewrite the fundamental math of the attention mechanism to save a single gigabyte of VRAM.

This economic pressure has created a permanent divergence. Even if sanctions were lifted tomorrow, Chinese labs would not return to the “Western” way of building models. They have found a better way.

The Consumer Hardware Mirage?

There is a rumor circulating on GitHub and HuggingFace that DeepSeek V4 “runs on dual RTX 4090s.” Is this claim accurate?

Partially.

The full 671 billion parameters of the V3 architecture (which V4 is expected to mirror or exceed) would require nearly 400GB of VRAM to run at a commercially viable speed, far beyond what consumer cards can offer (a pair of 4090s has 48GB).
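The gap is simple to estimate. The sketch below computes the memory needed for the weights alone at a few precisions. The precisions are assumptions for illustration (the article does not say which one its 400GB figure uses), and the estimate ignores the KV cache and activations, which add further overhead.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory for model weights only, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


V3_PARAMS = 671e9      # parameter count cited for the V3 architecture
DUAL_4090_VRAM_GB = 48  # two 24GB cards

for bits in (16, 8, 4):
    need = weight_memory_gb(V3_PARAMS, bits)
    print(f"{bits:>2}-bit weights: {need:,.1f} GB "
          f"({need / DUAL_4090_VRAM_GB:.1f}x a dual-4090 setup)")
```

Even at 4 bits per parameter the weights alone come to several hundred gigabytes, roughly in line with the figure above once overhead is added, and many times what two consumer cards can hold.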

However, the Engram architecture attempts to change the equation. Because the bulk of the “knowledge” is offloaded to system RAM (where a user can easily install 256GB of DDR5 for under $1,000), the GPU theoretically only needs to hold the “reasoning core.”

This implies that a researcher in Shenzhen, or a hobbyist in Ohio, with a high-end workstation can run a model that behaves like a trillion-parameter giant, albeit with higher latency for factual lookups. It threatens to break the monopoly of the Data Center. Users no longer need an H100 cluster to run SOTA AI; they just need significant amounts of cheap RAM and a smart architecture.

The Auto Industry Parallel

History repeats itself. In the 1970s, the US automotive industry was addicted to displacement. Big V8 engines produced horsepower through sheer volume. When the Oil Crisis hit, Detroit was caught flat-footed.

Japan, resource-constrained and fuel-taxed, had spent decades perfecting the small, efficient inline-four engine. When gas became expensive, the Honda Civic did not just compete with the Ford Mustang; it dominated the market.

US AI is the 1970s V8. It is powerful, loud, and incredibly wasteful. Chinese AI is the 1980s Civic. It is lean, efficient, and increasingly capable of doing the same job for a fraction of the cost.

The US sanctions were intended to act as a blockade. Instead, they functioned as a carbon tax. By making compute expensive, US policy forced a rival to invent the hybrid engine of intelligence. Now, as the electric grid begins to buckle under the power demands of Western data centers, the “Lean AI” born from necessity may prove to be the only architecture that can truly scale.
