The AI industry has been operating on a single, expensive assumption: Scale is the only moat and compute is the only currency. For two years, the roadmap was clear: spend $100 million, then $1 billion, then $10 billion on H100 clusters to brute-force intelligence.

DeepSeek-V3 just shattered that assumption.

Released quietly by a Chinese research lab, DeepSeek-V3 ostensibly matches GPT-4o and Claude 3.5 Sonnet on critical benchmarks like MATH and LiveCodeBench. But the performance isn’t the headline. The headline is the price tag. DeepSeek claims to have trained this 671-billion-parameter monster for roughly $5.6 million in rented GPU time, a figure that covers the final training run rather than the research and experiments that preceded it.

Should this figure prove accurate, the financial logic of the generative AI sector has been upended. The industry is witnessing the “Linux moment” of LLMs—a shift where architectural elegance beats raw brute force and the proprietary moat of Silicon Valley giants begins to evaporate.

The History of the Revolt: From LLaMA to DeepSeek

To understand the gravity of this release, it is necessary to contextualize it within the “Open vs. Closed” war that has raged since 2023.

Phase 1: The Leak (LLaMA-1)

In February 2023, Meta released LLaMA-1 to approved researchers; within about a week, the weights had “leaked” onto 4chan. For the first time, researchers could run a GPT-3 class model on their own hardware. It sparked a Cambrian explosion of fine-tuning (Alpaca, Vicuna), proving that smaller, optimized models could punch above their weight. But it was still a toy compared to GPT-4.

Phase 2: The Contenders (Mistral & Llama 3)

Then came Mistral Large and Llama 3. These models closed the gap, offering GPT-3.5 or GPT-4 Turbo levels of performance. They proved that open weights weren’t just for hobbyists, but for enterprise-grade applications. However, they were still trained on massive, traditional clusters, and their training costs remained in the tens of millions.

Phase 3: The Efficiency Shock (DeepSeek-V3)

DeepSeek-V3 marks the third phase. It is not just “good for an open model”; it claims state-of-the-art (SOTA) performance on several headline benchmarks. But critically, it achieved this without the near-unlimited budget of Meta or Microsoft. By optimizing the architecture itself, DeepSeek makes a strong case that algorithmic innovation can beat raw compute scale.

The Efficiency Paradox: Breaking the “Scaling Law”

To understand why DeepSeek-V3 is terrifying to closed-source labs, one must look under the hood. Most LLMs today are dense models or standard Mixture-of-Experts (MoE). They burn VRAM like coal locomotives.

DeepSeek didn’t just train a bigger model; they changed the physics of the engine.

Multi-Head Latent Attention (MLA): The Memory Compressor

The killer feature of DeepSeek-V3 is Multi-Head Latent Attention (MLA). In traditional Transformer models, the Key-Value (KV) cache is a memory vampire. As the context window grows (e.g., 128k tokens), the memory required to store specific “keys” and “values” explodes, forcing researchers to buy more H100s just to serve inference.

MLA compresses this cache. Instead of storing the full Key and Value heads, it projects them into a low-rank latent vector.

By storing a compressed “summary” of the attention data rather than the raw data itself, MLA shrinks the KV cache dramatically. DeepSeek reported a reduction of roughly 93% when it introduced the technique in DeepSeek-V2, measured against its earlier dense 67B model. This allows DeepSeek-V3 to serve long contexts on significantly fewer GPUs. For an industry obsessed with tokens-per-second (TPS) throughput and the cost of serving each token, this is a holy grail.
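To make the memory argument concrete, here is a back-of-the-envelope sketch comparing a cache that stores every head's keys and values with one that stores a single latent vector per layer. The layer count, head count, and latent sizes are assumptions picked so the ratio lands in the same range as the figure quoted above; they are not DeepSeek's published configuration, and the function names are hypothetical.

```python
def full_kv_elems(n_layers, n_heads, head_dim):
    """Values cached per token when every head's key and value are stored."""
    return n_layers * 2 * n_heads * head_dim


def latent_kv_elems(n_layers, latent_dim, rope_dim):
    """Values cached per token when each layer stores one shared latent
    vector plus a small positional key instead of per-head keys/values."""
    return n_layers * (latent_dim + rope_dim)


def cache_gib(elems_per_token, n_tokens, bytes_per_elem=2):
    """Cache size in GiB for one sequence (2 bytes per value = 16-bit)."""
    return elems_per_token * n_tokens * bytes_per_elem / 2**30


# Assumed, illustrative dimensions (NOT DeepSeek's published config).
N_LAYERS, N_HEADS, HEAD_DIM = 60, 32, 128
LATENT_DIM, ROPE_DIM = 512, 64
CONTEXT_TOKENS = 128_000

full = full_kv_elems(N_LAYERS, N_HEADS, HEAD_DIM)
latent = latent_kv_elems(N_LAYERS, LATENT_DIM, ROPE_DIM)
reduction = 1 - latent / full

print(f"full cache:   {cache_gib(full, CONTEXT_TOKENS):.1f} GiB per sequence")
print(f"latent cache: {cache_gib(latent, CONTEXT_TOKENS):.1f} GiB per sequence")
print(f"reduction:    {reduction:.1%}")
```

The exact percentage depends entirely on the baseline you compare against, so treat the output as an illustration of the scaling, not a reproduction of DeepSeek's measurement.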

DeepSeekMoE: The Specialist Swarm

DeepSeek also refines the Mixture-of-Experts (MoE) architecture. A standard MoE model routes each token to its top-k experts (loosely, a “coding expert” or a “history expert,” although real experts rarely specialize so cleanly). However, traditional MoE suffers from “expert collapse” (also called routing collapse), where a few experts get all the work while others sit idle.

DeepSeek-V3 uses a granular approach:

- Fine-Grained Experts: Instead of 8 massive experts, it splits functionality into much finer slices. DeepSeek-V3 uses 256 routed experts per MoE layer and activates 8 of them for each token.

- Shared Experts: It designates certain experts as “always on” shared resources that capture common knowledge, preventing the “knowledge island” problem where specialized experts lose touch with general grammar or logic.

The result? A model with 671 billion parameters that only activates 37 billion per token. It has the brain size of a giant but the calorie consumption of a toddler.
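The shared-plus-routed idea can be sketched in a few lines. This is a toy under stated assumptions: experts are plain scalar functions, the router scores are handed in rather than learned, and `moe_forward` is a hypothetical name, not DeepSeek's code.

```python
def top_k_indices(scores, k):
    """Indices of the k highest scores, best first."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def moe_forward(x, shared_experts, routed_experts, router_scores, k):
    """Shared experts always run; only the top-k routed experts run,
    weighted by their normalized router scores."""
    chosen = top_k_indices(router_scores, k)
    gate_total = sum(router_scores[i] for i in chosen)
    out = sum(expert(x) for expert in shared_experts)
    for i in chosen:
        out += (router_scores[i] / gate_total) * routed_experts[i](x)
    return out, chosen


# Toy setup (assumed): routed expert i multiplies its input by i,
# and the single shared expert is the identity.
routed = [lambda x, w=w: w * x for w in range(8)]
shared = [lambda x: x]
scores = [0.10, 0.05, 0.40, 0.05, 0.10, 0.20, 0.05, 0.05]

output, active = moe_forward(1.0, shared, routed, scores, k=2)
print("active routed experts:", active)
print("output:", output)

# Fraction of parameters touched per token, using the article's figures.
active_fraction = 37 / 671
print(f"active parameters per token: {active_fraction:.1%}")
```

Only two of the eight routed experts do any work for this input, which is where the gap between total and active parameter counts comes from.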

Auxiliary-Loss-Free Load Balancing

One of the most technical but impactful innovations concerns training stability. Typically, to ensure all experts in an MoE model are used roughly equally, researchers add an “auxiliary loss” function: a penalty applied when the router neglects certain experts.

The problem? This penalty hurts the model’s actual performance. It forces the model to use “sub-optimal” experts just to satisfy the quota. DeepSeek-V3 introduces Auxiliary-Loss-Free load balancing. Instead of penalizing the model, they dynamically adjust a per-expert “bias” term that is added to the routing score only when choosing which experts to call. If an expert is overworked, its bias is nudged down, so its effective “cost” to call goes up slightly; if it is underused, its bias is nudged up. This naturally encourages the router to look elsewhere without adding a competing term to the training gradient.
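A minimal sketch of the bias mechanism, assuming a fixed batch of three tokens with hand-picked routing scores and a hypothetical update step of 0.125. Lowering an overloaded expert's bias is the same thing as raising its "cost" in the description above. The function names are illustrative, not taken from DeepSeek's code.

```python
def select_experts(scores, bias, k):
    """Pick the top-k experts by score + bias. The bias only steers
    selection; it is not part of any loss."""
    biased = [s + b for s, b in zip(scores, bias)]
    ranked = sorted(range(len(scores)), key=lambda i: biased[i], reverse=True)
    return ranked[:k]


def update_bias(bias, load, step):
    """Lower the bias of experts above the mean load, raise it for
    experts below the mean, leave exactly-average experts alone."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step)
        elif n < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


def run_balancing(batch, n_experts, k=1, step=0.125, n_steps=5):
    """Route the same batch repeatedly, adjusting biases after each pass."""
    bias = [0.0] * n_experts
    history = []
    for _ in range(n_steps):
        load = [0] * n_experts
        for scores in batch:
            for i in select_experts(scores, bias, k):
                load[i] += 1
        history.append(load)
        bias = update_bias(bias, load, step)
    return bias, history


# Assumed toy batch: every token initially prefers expert 0.
BATCH = [
    [0.9, 0.5, 0.1],
    [0.8, 0.1, 0.5],
    [0.7, 0.6, 0.2],
]
final_bias, load_history = run_balancing(BATCH, n_experts=3)
for step_no, load in enumerate(load_history):
    print(f"step {step_no}: tokens per expert = {load}")
print("final bias:", final_bias)
```

In this toy, expert 0 starts out receiving every token and the load evens out after two bias updates, with no penalty term ever entering a loss function.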

FP8 Mixed Precision: The Speed Limit Break

The $5.6 million training cost figure relies heavily on their use of FP8 (8-bit Floating Point) precision.

Historically, models are trained in BF16 (16-bit). FP8 halves the memory footprint and bandwidth of the tensors it is applied to, chiefly the inputs to matrix multiplications. While NVIDIA’s H100s support FP8, few labs have successfully trained frontier models with FP8 at this scale, because the format’s narrow dynamic range invites instability (numerical overflow and underflow).

DeepSeek cracked this. By using fine-grained quantization (a separate scaling factor for each small tile: 1x128 tiles for activations and 128x128 blocks for weights), they maintained the numerical precision needed for convergence while reaping the speedups of 8-bit math. This ostensibly allowed them to train roughly 40% faster than a comparable BF16 run, directly slashing the rental bill for the GPU cluster.
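Why does the size of the scaling group matter? The sketch below simulates an FP8-like format (E4M3: maximum magnitude 448, three mantissa bits) in plain Python and compares one scaling factor for a whole tensor against one per tile. The rounding routine is a rough approximation, and the data and the tile size of 128 are assumptions chosen for illustration.

```python
import math

E4M3_MAX = 448.0               # largest finite E4M3 magnitude
E4M3_MIN_NORMAL = 2.0 ** -6    # smallest normal magnitude
E4M3_SUBNORMAL_STEP = 2.0 ** -9


def fake_e4m3(x):
    """Round x to a nearby E4M3-like value (rough simulation, no NaN handling)."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)            # saturate instead of overflowing
    if mag < E4M3_MIN_NORMAL:              # subnormal range: fixed step size
        return sign * round(mag / E4M3_SUBNORMAL_STEP) * E4M3_SUBNORMAL_STEP
    mant, exp = math.frexp(mag)            # mag == mant * 2**exp, 0.5 <= mant < 1
    mant = round(mant * 16) / 16           # keep 4 significant bits
    return sign * min(math.ldexp(mant, exp), E4M3_MAX)


def quantize_dequantize(values, tile_size):
    """Scale each tile so its largest magnitude maps to E4M3_MAX,
    round to the simulated format, then scale back."""
    out = []
    for start in range(0, len(values), tile_size):
        tile = values[start:start + tile_size]
        amax = max(abs(v) for v in tile)
        scale = amax / E4M3_MAX if amax > 0 else 1.0
        out.extend(fake_e4m3(v / scale) * scale for v in tile)
    return out


# 256 small activations with a single large outlier in the second half.
data = [0.001 * (1 + (i % 7) / 10) for i in range(256)]
data[200] = 1000.0

per_tensor = quantize_dequantize(data, tile_size=len(data))  # one scale overall
per_tile = quantize_dequantize(data, tile_size=128)          # one scale per tile

zeros_per_tensor = sum(1 for q in per_tensor if q == 0.0)
zeros_per_tile = sum(1 for q in per_tile if q == 0.0)
print("values flushed to zero, per-tensor scale:", zeros_per_tensor)
print("values flushed to zero, per-tile scale:  ", zeros_per_tile)
```

With a single scale, the outlier forces every small value below the format's resolution. With per-tile scales, the damage is confined to the tile that contains the outlier, which is the intuition behind fine-grained scaling.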

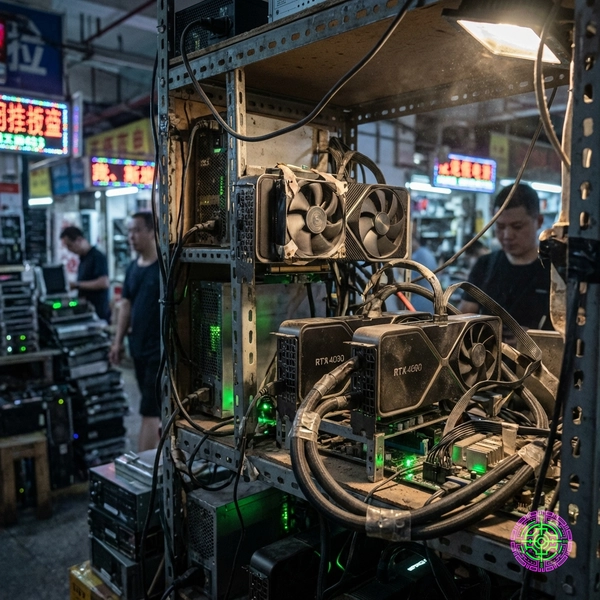

The Geopolitical Glitch

The existence of DeepSeek-V3 is a direct challenge to U.S. export controls. The Biden administration’s October 7, 2022 chip export rules were designed to freeze China’s AI development at the GPT-3 era by denying it access to NVIDIA’s cutting-edge H100s.

The theory was that without 3.2 terabits per second of interconnect speed, you couldn’t train a frontier model.

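DeepSeek circumvented this by optimizing for low-bandwidth communication. The model was reportedly trained on 2,048 H800 GPUs, the export-compliant variant of the H100 with reduced interconnect bandwidth. By shrinking the data sent between GPUs (using FP8 precision) and overlapping communication with computation (the DualPipe pipeline schedule), they managed to train a frontier model on hardware that was supposed to be “obsolete” or technically restricted.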

They didn’t break the blockade; they engineered a car that runs on different fuel.

Performance Reality Check

Is it actually as good as GPT-4o? The benchmarks suggest “Yes, mostly.”

Here is how it stacks up on the “Big Three” benchmarks:

| Benchmark | Domain | DeepSeek-V3 | GPT-4o (May 2024) | Claude 3.5 Sonnet |
|---|---|---|---|---|
| MATH | Advanced Mathematics | 90.0% | 76.6% | 71.1% |
| LiveCodeBench | Coding (Hard) | 40.2% | 43.1% | 46.2% |
| MMLU | General Knowledge | 88.5% | 88.7% | 88.3% |

Data sourced from publicly reported Technical Reports and independent eval harnesses.

The “Math” Anomaly

The 90.0% score on MATH is staggering. It suggests DeepSeek has over-indexed on reasoning datasets, likely synthetic data generated by other frontier models (distillation). However, on coding, it lags a few points behind both GPT-4o and Claude 3.5 Sonnet, the latter of which remains the developer sweetheart.

Nuance & “Vibe”

Qualitative testing shows distinct differences. DeepSeek-V3 is significantly more “robotic” and concise than GPT-4o. It lacks the “creative fluff” that OpenAI has notoriously trained into its models through RLHF (Reinforcement Learning from Human Feedback). For creative writing, GPT-4o still wins. For strictly extracting data from a PDF? DeepSeek might actually be better because it pads its answers with less “polite conversation.”

The End of the Rent-Seeker Era?

The industry is moving away from the “Model as a Service” era. If a $5.6 million training run can effectively replicate the output of a $100 million training run, the margins for OpenAI and Anthropic are in danger.
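The headline training figure is simple arithmetic: reported GPU-hours multiplied by an assumed rental price. As far as the technical report states, the run used about 2.788 million H800 GPU-hours priced at $2 per GPU-hour; both inputs are DeepSeek's own numbers and assumptions, not audited costs, and the $100 million comparison point is the round number used above.

```python
def training_cost_usd(gpu_hours, usd_per_gpu_hour):
    """Rental-style cost estimate: hours of GPU time times hourly price."""
    return gpu_hours * usd_per_gpu_hour


# Inputs as reported by DeepSeek (assumed here, not independently verified).
GPU_HOURS = 2_788_000        # total H800 GPU-hours for the final run
RENTAL_RATE_USD = 2.00       # assumed rental price per GPU-hour

cost = training_cost_usd(GPU_HOURS, RENTAL_RATE_USD)
ratio_vs_100m = 100_000_000 / cost

print(f"estimated training cost: ${cost:,.0f}")
print(f"a $100M run costs about {ratio_vs_100m:.1f}x as much")
```

The estimate covers rented compute for one run only; salaries, data, failed experiments, and owning the cluster are outside it.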

Token Economics

Currently, OpenAI charges roughly $2.50 per 1M input tokens for GPT-4o. DeepSeek’s API prices V3 at roughly $0.14 per 1M input tokens.

That is roughly an 18x price difference.

For startups building RAG (Retrieval Augmented Generation) pipelines, this is not a marginal optimization; it is a business model enabler. Applications that were “too expensive” to build on GPT-4o (e.g., reading entire legal repositories for every query) are suddenly trivial on DeepSeek.
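To see what the gap means for a token-heavy workload, here is a small cost sketch. The per-million prices are the ones quoted above; the workload (50,000 input tokens per query, 100,000 queries per month) is a made-up example, and output-token charges are ignored.

```python
def monthly_input_cost(usd_per_million_tokens, tokens_per_query, queries_per_month):
    """Input-token spend per month in USD (output tokens not included)."""
    total_tokens = tokens_per_query * queries_per_month
    return usd_per_million_tokens * total_tokens / 1_000_000


GPT4O_USD_PER_M = 2.50      # quoted input price
DEEPSEEK_USD_PER_M = 0.14   # quoted input price

# Hypothetical RAG workload: long retrieved context on every query.
TOKENS_PER_QUERY = 50_000
QUERIES_PER_MONTH = 100_000

gpt4o_bill = monthly_input_cost(GPT4O_USD_PER_M, TOKENS_PER_QUERY, QUERIES_PER_MONTH)
deepseek_bill = monthly_input_cost(DEEPSEEK_USD_PER_M, TOKENS_PER_QUERY, QUERIES_PER_MONTH)
price_ratio = GPT4O_USD_PER_M / DEEPSEEK_USD_PER_M

print(f"GPT-4o:      ${gpt4o_bill:,.0f} per month")
print(f"DeepSeek-V3: ${deepseek_bill:,.0f} per month")
print(f"price ratio: {price_ratio:.1f}x")
```

At this volume the difference is between a five-figure monthly bill and a three-figure one, which is what turns "read everything on every query" from a luxury into a default.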

The Forward Outlook: 2026 and Beyond

DeepSeek-V3 proves that you don’t need a trillion dollars to build a god. You just need better math.

Looking toward 2026, the distinguishing factor for AI companies will no longer be “Who has the best model?”—that gap is closing to zero. The distinguishing factor will be integration and trust.

Can a U.S. defense contractor trust a Chinese model with their proprietary codebase? The answer is “No.” However, for a bootstrapped SaaS founder in Berlin, the answer is “Yes, absolutely.”

The moat is gone. The floodgates are open and the water is rising fast.
