Key Takeaways

- The $100M Illusion: While Western giants like OpenAI and Google reportedly spend upwards of $100M per model training run, DeepSeek-V3's final training run was reported at just $5.6M in GPU time, suggesting that brute-force compute is a choice, not a requirement.

- Sparse Activation Moat: DeepSeek uses a Mixture-of-Experts (MoE) architecture that activates only about 37B of its 671B total parameters per token, a ratio of roughly 1/18 that slashes inference costs.

- RL-First Intelligence: By pioneering reinforcement learning-first training (DeepSeek-R1-Zero), the team demonstrated that reasoning capabilities can emerge without expensive, human-labeled supervised fine-tuning.

- The Efficiency Gap: The “Computing Moat” is rapidly being replaced by an “Efficiency Moat,” as companies like DeepSeek achieve state-of-the-art performance using export-restricted H800 hardware instead of full-bandwidth H100/H200 clusters.

The Compute Moat Is Cracking

The narrative is familiar: to build world-class AI, you need a sovereign-scale data center, tens of billions in capital, and a direct pipeline to NVIDIA hardware. In January 2025, that narrative hit a wall. While hyperscalers such as Microsoft and Google were committing hundreds of billions of dollars to AI capex, a relatively lean team from China released DeepSeek-R1, a model that matches OpenAI’s o1 in reasoning performance at a fraction of the cost.

This isn’t just a minor optimization; it’s a structural disruption of the AI industry’s economics. For two years, the “Compute Moat” was the dominant theory: the idea that the entity with the most GPUs and the most electricity wins by default. DeepSeek has challenged that theory by showing that algorithmic efficiency can overcome a 10x disadvantage in hardware scale. If a GPT-4 class model can be trained for the price of a mid-sized apartment in Manhattan, the barrier to entry that protected Silicon Valley incumbents just vanished.

Technical Deep Dive: The Mastery of Sparse Activation

How do you train a 671-billion-parameter model on a reported budget of $5.6 million? The answer lies in Mixture-of-Experts (MoE) and extreme sparse activation. Traditional “dense” models, like the original GPT-3, activate every single parameter for every single token they generate. It’s the computational equivalent of waking up an entire city’s worth of people just to ask one person for directions.

DeepSeek-V3 and R1 use a specialized MoE architecture. While the model contains 671 billion parameters in total, it activates only a specific subset of approximately 37 billion for any given token.

The Expert Dispatcher

Think of it like a massive library where, instead of one librarian trying to know everything, hundreds of highly specialized researchers are available. When a question about quantum physics is asked, the “Dispatcher” (the gating network) sends the request only to the physics experts. The math looks like this:

37B active parameters / 671B total parameters ≈ 0.055 ≈ 1/18

This 1/18 ratio means that, per token, DeepSeek does roughly 5.5% of the work that a dense model of equivalent total size would perform. By keeping the vast majority of the model “dark” at any given moment, the system can hold about 18 times more parameters than a dense model with the same per-token compute, while keeping inference speed and training cost manageable.
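To make the “Dispatcher” idea concrete, here is a minimal sketch of generic top-k expert routing for a single token. It is an illustration under stated assumptions, not DeepSeek’s actual router: the expert count, the value of `top_k`, the softmax gate, and the toy scalar “experts” are all hypothetical stand-ins chosen to keep the example small.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of gate scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_scores, top_k):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, experts, gate_scores, top_k):
    """Run only the selected experts and mix their outputs by gate weight."""
    routes = route_token(gate_scores, top_k)
    output = sum(weight * experts[i](token) for i, weight in routes)
    return output, routes


# Hypothetical toy setup: 16 "experts", each a simple scalar function.
NUM_EXPERTS, TOP_K = 16, 2
experts = [lambda x, k=k: x * (k + 1) for k in range(NUM_EXPERTS)]

random.seed(0)
gate_scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
output, routes = moe_layer(1.0, experts, gate_scores, TOP_K)

active_fraction = TOP_K / NUM_EXPERTS  # share of toy experts that ran
model_fraction = 37 / 671              # active vs. total parameters cited above

print(f"experts used: {[i for i, _ in routes]}")
print(f"toy active fraction: {active_fraction:.3f}")
print(f"37B / 671B: {model_fraction:.3f}")
```

The point of the sketch is that the other 14 toy experts are never called for this token, which is where the compute savings come from. In a real model the gate scores are produced by a learned layer rather than drawn at random.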

Hardware Optimization: Making the H800 Sing

Perhaps the most impressive technical feat is that DeepSeek achieved these results on NVIDIA H800 GPUs, the modified versions of the H100 built to comply with U.S. export controls. These chips have less than half the interconnect bandwidth of the H100 (400GB/s vs 900GB/s). To overcome this, the DeepSeek team developed custom communication kernels that minimize data transfer between GPUs and overlap it with computation, effectively “hiding” the hardware bottleneck through engineering.
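One common way to “hide” a communication bottleneck is to run data transfer concurrently with computation, so that the slower of the two sets the step time instead of their sum. The toy model below sketches that idea with the two bandwidth figures quoted above. The payload size and compute time are hypothetical numbers picked for illustration, and the model ignores latency, topology, and everything else a real cluster has to deal with.

```python
def comm_time_ms(payload_gb, bandwidth_gb_per_s):
    """Transfer time in milliseconds for a payload at a given bandwidth."""
    return payload_gb / bandwidth_gb_per_s * 1000.0


def step_time_ms(compute_ms, comm_ms, overlap):
    """Step time under a simple two-phase model.

    Without overlap the phases run back to back; with overlap they run
    concurrently, so the slower phase dominates.
    """
    if overlap:
        return max(compute_ms, comm_ms)
    return compute_ms + comm_ms


PAYLOAD_GB = 2.0  # hypothetical data exchanged per step
COMPUTE_MS = 6.0  # hypothetical compute time per step

for name, bandwidth in (("900GB/s link", 900.0), ("400GB/s link", 400.0)):
    comm = comm_time_ms(PAYLOAD_GB, bandwidth)
    serial = step_time_ms(COMPUTE_MS, comm, overlap=False)
    hidden = step_time_ms(COMPUTE_MS, comm, overlap=True)
    print(f"{name}: comm={comm:.2f} ms, serial={serial:.2f} ms, overlapped={hidden:.2f} ms")
```

Under these made-up numbers, the slower link costs noticeably more per step when transfer and compute run back to back, but the gap disappears once the transfer fits entirely inside the compute window. That only works while communication stays shorter than computation, which is why reducing the amount of data moved matters as well.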

RL-First: Reasoning Without the Human Bill

The second pillar of the efficiency gap is Reinforcement Learning (RL). Traditionally, AI models go through a process called Supervised Fine-Tuning (SFT), where thousands of humans are paid to write “perfect” answers to prompts. This is slow, expensive, and limited by human intelligence.

DeepSeek-R1-Zero took a different path: pure RL. The model was given a set of rules, a goal (solving a math problem), and a “Verifiable Reward” system. If the model got the answer right, it was rewarded. If it failed, it was penalized.
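The reward-and-penalty loop described above can be sketched in a few lines. This is a simplified illustration, not DeepSeek’s training code: the `\boxed{}` answer convention, the exact-match check, and the sample completions are assumptions made for the example. The second function shows a group-relative normalization in the spirit of GRPO, the policy optimization method used in the R1 work, where each sampled answer is scored relative to the other samples for the same prompt.

```python
import re
import statistics


def verifiable_reward(completion, expected_answer):
    """Return 1.0 if the boxed final answer matches exactly, else 0.0."""
    match = re.search(r"\\boxed\{([^}]*)\}", completion)
    if match is None:
        return 0.0  # no parseable final answer
    return 1.0 if match.group(1).strip() == expected_answer else 0.0


def group_relative_advantages(rewards):
    """Normalize rewards within one group of samples for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0.0:
        # All samples scored the same, so there is no learning signal.
        return [0.0 for _ in rewards]
    return [(r - mean) / std for r in rewards]


# Hypothetical completions sampled for the prompt "What is 12 * 12?"
samples = [
    "First 12 * 12 = 144, so the answer is \\boxed{144}",
    "I think it is \\boxed{124}",
    "Wait, let me re-check: 12 * 12 = 144. \\boxed{144}",
    "The answer is 144",
]
rewards = [verifiable_reward(s, "144") for s in samples]
advantages = group_relative_advantages(rewards)

print(rewards)
print(advantages)
```

No human grades any individual answer here: a rule checks correctness, and samples that beat the group average get a positive advantage while the rest get a negative one. That is the sense in which wrong answers are “penalized.”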

Over thousands of iterations, the model developed its own “Chain of Thought” (CoT) without a single human showing it how to think. It discovered logic, error correction, and even self-doubt entirely through trial and error. The final R1 release added a small “Cold-Start” SFT phase to improve readability, but the methodology still sharply reduces the multi-million dollar human-labeling bill that Western companies consider a mandatory cost of business.

Contextual History: The End of the Brute Force Era

To understand why this matters, look back at the “Scaling Laws” identified by researchers in 2020. The finding was that model loss falls predictably, following a power law, as data, parameters, and compute are scaled up. For five years, the industry followed this roadmap with religious fervor.

- 2020: GPT-3 proves that 175B params is the new floor.

- 2023: GPT-4 pushes the reported training budget past the $100 million mark.

- 2024: Meta’s Llama 3.1 405B trains on more than 16,000 H100s, costing an estimated $120M.

DeepSeek’s R1 release in January 2025 marks the end of this brute-force era. History shows that whenever a resource such as GPU compute becomes prohibitively expensive, the market eventually finds a way to substitute it with a cheaper resource: mathematical cleverness. The industry is seeing the “Software-Defined GPU” play out in real time.

Forward-Looking Analysis: The Capex Trap

The industry is now entering the “Western Capex Trap.” Companies like Microsoft, Amazon, and Google have already committed hundreds of billions of dollars to massive physical data centers based on the assumption that compute is a durable moat.

But what happens to the Return on Investment (ROI) of a $100B cluster if a competitor can achieve the same result using a $10B cluster and better math?

The Margin Compression

As DeepSeek provides API access at roughly 1/30th the price of OpenAI o1, massive deflationary pressure is hitting the AI market. Enterprises are starting to realize they don’t need to pay an “OpenAI tax” for reasoning tasks. This forces Western providers to choose between two options:

- Cut prices and destroy their own margins to compete.

- Accept market share loss as developers migrate to more efficient open-source or cheaper alternatives.

The Geopolitical Shift

This isn’t just a business problem; it’s a geopolitical reset. U.S. export controls were designed to starve Chinese AI of compute. By forcing Chinese teams to work with restricted hardware, the controls unintentionally incentivized them to become some of the world’s most efficient algorithmic engineers. The “scarcity mindset” has produced a generation of models that are designed for tight compute budgets in a way the “abundance models” built in Silicon Valley are not.

What This Means for You

For a developer or a business leader, the “Compute Moat” is no longer a valid reason to ignore AI competition. The barrier to entry for high-reasoning AI has dropped from “Sovereign State” levels to “Mid-Cap Corporate” levels.

If you’re a developer:

- Stop optimizing for the “largest” model and start experimenting with distilled versions of R1.

- Focus on Agentic Workflows where multiple small, efficient experts solve problems together rather than one giant, expensive model.

If you’re an investor:

- Carefully scrutinize the capex-to-intelligence ratio of your AI holdings.

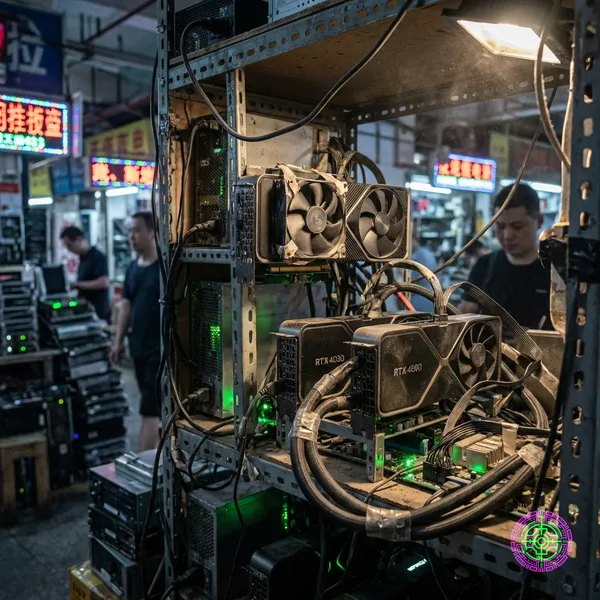

- Ask: “Are they building a moat of silicon, or a moat of math?” Silicon moats can be bypassed as easily as the data center vault in the hero image.

The Verdict: Efficiency Over Brute Force

The release of DeepSeek-R1 in January 2025 will be remembered as the moment the AI industry stopped measuring power in teraflops and started measuring it in efficiency ratios. The Western Capex Trap is a warning to every company currently building a multi-billion dollar compute fortress: the math of the efficiency gap doesn’t care about a balance sheet. In the end, the most intelligent system isn’t the one with the most GPUs. It’s the one that does the most with the least.
