Key Takeaways

- The Yearly Refresh: NVIDIA has officially abandoned the two-year product cycle, moving to a “Tick-Tock” annual cadence with the Blackwell Ultra (B300) launch.

- Memory is the Moat: The B300’s primary advantage is the transition to 12-hi High Bandwidth Memory 3e (HBM3e), boosting capacity to 288GB per GPU.

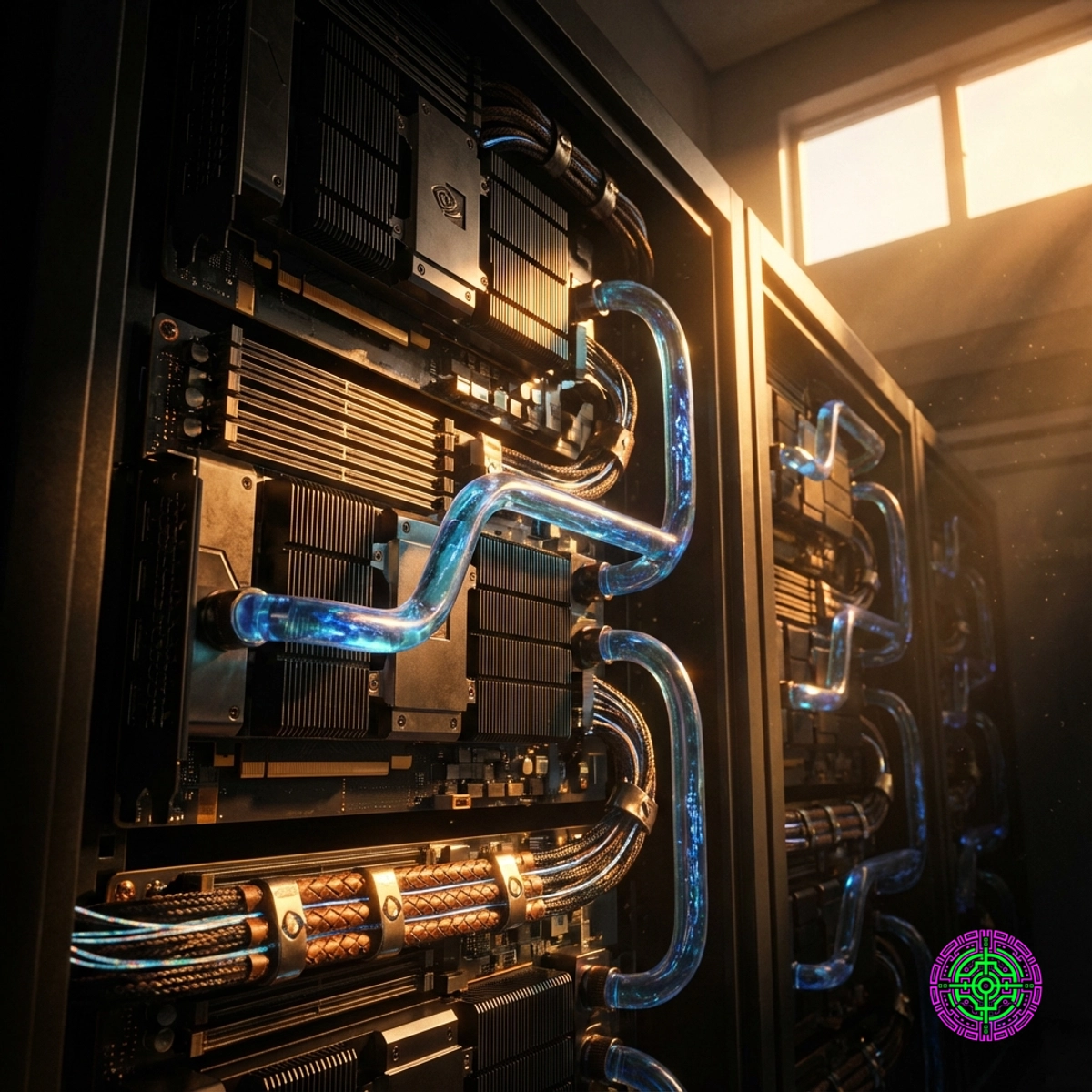

- Power vs. Performance: With a 1,400-watt Thermal Design Power (TDP), the B300 makes liquid cooling a mandatory infrastructure requirement rather than an optional luxury.

- The CapEx Trap: Hyperscalers are forced to invest billions in “Ultra” refreshes mid-deployment, or risk falling behind in the race for “Reasoning” model dominance.

The End of the Two-Year Cycle

In the history of the semiconductor industry, the “Tick-Tock” model was famously championed by Intel to maintain dominance in the CPU market. One year brought a shrink of the manufacturing process (Tick), and the next brought a new microarchitecture on that process (Tock). For years, NVIDIA operated on a more relaxed, roughly two-year cadence: Pascal (2016), Volta (2017/18), Ampere (2020), Hopper (2022), and finally Blackwell (2024).

But the AI gold rush has changed the physics of the market. On December 4, 2025, Supermicro began shipping the first high-volume liquid-cooled HGX B300 systems, signaling that the “Ultra” era is officially here. This isn’t just a minor patch; it is a USD 100 billion mid-cycle refresh that forces every cloud provider on the planet to rethink their capital expenditure (CapEx) for 2026.

NVIDIA isn’t just selling chips anymore; it is selling a permanent upgrade subscription. A company that bought standard Blackwell (B200) six months ago is already behind. The Blackwell Ultra (B300) represents a strategic maneuver to deny competitors any “breathing room” before the next-generation “Rubin” architecture arrives in 2026.

The 12-Hi Memory Wall

The bottleneck for modern generative AI systems (LLMs) is rarely raw compute power. Instead, it is the ability to feed that compute with data, a process governed by High Bandwidth Memory (HBM).

The HBM3e 12-Hi Transition

Standard Blackwell cards used 8-hi HBM3e stacks. These are essentially 8-story buildings of memory chips stacked atop one another. The Blackwell Ultra moves to 12-hi HBM3e. By increasing the stack height, NVIDIA increased the memory capacity per GPU from 192GB to 288GB.
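
The capacity jump follows directly from the stack height. The sketch below reproduces the arithmetic under two assumptions that are not stated in this article: that each GPU carries 8 HBM3e stacks, and that each DRAM die in a stack holds 24 Gbit (3 GB).

```python
# Assumed figures (not from the article): 8 HBM3e stacks per GPU,
# and 24 Gbit (3 GB) per DRAM die in each stack.
STACKS_PER_GPU = 8
GB_PER_DIE = 3


def gpu_hbm_capacity_gb(dies_per_stack: int) -> int:
    """Total HBM capacity in GB for a given stack height."""
    return STACKS_PER_GPU * dies_per_stack * GB_PER_DIE


b200_gb = gpu_hbm_capacity_gb(8)    # 8-hi stacks
b300_gb = gpu_hbm_capacity_gb(12)   # 12-hi stacks
increase_pct = 100 * (b300_gb - b200_gb) / b200_gb

print(b200_gb, b300_gb, increase_pct)  # 192 288 50.0
```

Under those assumptions, the footprint on the package stays the same; only the height of each memory "building" changes.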

This 50% jump in memory is critical for:

- Model Residency: Complex “Reasoning” models, like OpenAI’s o1, require massive amounts of “active” memory to hold intermediate logical steps.

- Context Windows: Larger memory allows for longer context windows without requiring the model to “swap” data to slower storage, which causes latency.

- KV Cache: The Key-Value (KV) cache grows with both the number of concurrent users and the length of each user’s context. More memory means more requests served at once, and therefore higher throughput per GPU.
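
To make the KV cache point concrete, here is a rough sizing sketch. The model shape (80 layers, 8 KV heads, head dimension 128, 16-bit cache values) and the 70 GB weight footprint are hypothetical values chosen for illustration, not figures for any specific model, and the calculation ignores activations and framework overhead.

```python
GB = 10**9  # vendor-style decimal gigabyte


def kv_cache_bytes(layers, kv_heads, head_dim, context_tokens, bytes_per_value=2):
    """KV cache size for ONE sequence; the leading 2 covers keys and values."""
    return 2 * layers * kv_heads * head_dim * context_tokens * bytes_per_value


# Hypothetical model shape and context length (illustrative only).
per_seq = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128, context_tokens=32_768)

# Hypothetical weight footprint, e.g. 70B parameters at 1 byte each.
WEIGHTS_GB = 70


def max_sequences(hbm_gb: int) -> int:
    """Concurrent 32K-token sequences that fit after the weights are loaded."""
    return (hbm_gb - WEIGHTS_GB) * GB // per_seq


print(per_seq / 2**30)       # GiB of cache per sequence
print(max_sequences(192))    # B200-class memory
print(max_sequences(288))    # B300-class memory
```

With these made-up numbers the larger memory pool fits roughly twice as many long-context sequences, because the weights are a fixed cost and all of the extra capacity goes to cache.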

The Power Penalty

Excellence comes at a cost, measured in megawatts (MW). The B300 pushes the Thermal Design Power (TDP), the sustained heat output that the cooling system must be designed to remove, to 1,400 watts. For comparison, the H100 sat at 700 watts. NVIDIA has effectively doubled per-GPU power draw in just three years.

This shift makes traditional air-cooled data centers obsolete for top-tier AI training. Liquid cooling is no longer a niche enthusiast setup. The Transformer Crisis confirms the physical grid cannot support these loads without massive efficiency gains in cooling and power delivery.

Background: The Silicon Tick-Tock

The evolution from Hopper to Blackwell was the new-architecture step of the cycle. The B300 is the refinement step: the same architecture with more memory and a higher power budget. By shortening this cycle, NVIDIA is executing a classic “encirclement” strategy.

In 2023, the H100 was the only game in town. In 2024, competitors like AMD (MI325X) and AWS (Trainium2) began to close the gap on memory capacity. By launching the B300 “Ultra” just as those competitors hit the market, NVIDIA effectively moved the goalposts again.

This strategy relies on CoWoS-L (Chip-on-Wafer-on-Substrate with Local Silicon Interconnect) packaging technology from TSMC. CoWoS-L is the “glue” that allows NVIDIA to connect multiple GPU dies and HBM stacks into a single massive package. By pre-booking the vast majority of TSMC’s CoWoS capacity for 2026, NVIDIA is winning on supply chain denial.

The Silicon Moat: NVLink 130 TB/s

The real magic of the B300 is not the single chip; it is the network. In the GB300 NVL72 rack configuration, which links 72 B300 GPUs into one NVLink domain, the interconnect provides 130 Terabytes per second (TB/s) of aggregate bandwidth.

To visualize this: by the often-cited estimate of roughly 10 TB for the Library of Congress print collection, 130 TB/s could in principle move that much data in well under a second. This allows 72 GPUs to act as a single, giant, unified GPU. This “unified memory” architecture is what differentiates NVIDIA from every other player. While competitors make massive infrastructure bets on proprietary silicon, they still struggle to match the low-latency communications of NVIDIA’s NVLink fabric.
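
The aggregate figure is easier to reason about per GPU. The sketch below divides the numbers quoted in this article; it treats the 130 TB/s as evenly shared across the 72 GPUs and as fully usable, which is an idealization, so real transfers would be slower.

```python
# Figures quoted in the article.
AGGREGATE_TB_PER_S = 130
GPUS_PER_RACK = 72
HBM_GB_PER_GPU = 288

# Idealized even split of the aggregate bandwidth across GPUs.
per_gpu_tb_per_s = AGGREGATE_TB_PER_S / GPUS_PER_RACK

# Total HBM visible across the NVLink domain, in TB.
pooled_memory_tb = GPUS_PER_RACK * HBM_GB_PER_GPU / 1000

# Idealized time for one GPU to stream out its entire HBM contents.
seconds_to_move_hbm = HBM_GB_PER_GPU / (per_gpu_tb_per_s * 1000)

print(f"{per_gpu_tb_per_s:.2f} TB/s per GPU")
print(f"{pooled_memory_tb:.1f} TB pooled HBM")
print(f"{seconds_to_move_hbm:.2f} s to move one GPU's memory")
```

The pooled figure, a little over 20 TB of HBM in one rack, is what makes the "single giant GPU" framing plausible for very large models.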

The Data: The “Ultra” ROI

The financial implications of the B300 are massive.

Key Statistics:

- Memory Density: 288GB per GPU (50% increase over B200).

- Power Usage: 1.4kW per GPU (40% increase over B200).

- Interconnect Bandwidth: 130 TB/s in NVL72 configuration.

- Revenue Growth: NVIDIA’s data center revenue is projected to exceed USD 150 billion in FY2026, driven largely by the transition to liquid-cooled racks.
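
One way to read the first two statistics together is memory per unit of power. The sketch below backs out the implied B200 baseline from the percentages above rather than from a spec sheet, so treat the baseline as derived, not quoted.

```python
# Figures stated in the list above.
B300_MEMORY_GB = 288
B300_POWER_KW = 1.4
MEMORY_INCREASE = 0.50   # B300 vs. B200
POWER_INCREASE = 0.40    # B300 vs. B200

# Implied B200 baseline, derived from the stated percentages.
b200_memory_gb = B300_MEMORY_GB / (1 + MEMORY_INCREASE)
b200_power_kw = B300_POWER_KW / (1 + POWER_INCREASE)

# Memory capacity per kilowatt of TDP for each part.
b200_gb_per_kw = b200_memory_gb / b200_power_kw
b300_gb_per_kw = B300_MEMORY_GB / B300_POWER_KW
gain_pct = 100 * (b300_gb_per_kw / b200_gb_per_kw - 1)

print(f"B200: {b200_gb_per_kw:.0f} GB/kW, B300: {b300_gb_per_kw:.0f} GB/kW")
print(f"Memory-per-watt gain: {gain_pct:.1f}%")
```

Because memory grows faster than power, the B300 comes out modestly ahead on capacity per watt even though its absolute power draw is much higher.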

Industry Impact

Impact on Hyperscalers (Microsoft, Google, Meta)

For these three hyperscalers, the B300 is a double-edged sword. On one hand, it provides the performance needed to host the next generation of AI services. On the other, it accelerates the depreciation of existing H100 and B100 fleets. If a provider signed a three-year lease on an H100 cluster in 2024, that hardware is already two generations behind by early 2026.

Impact on the Energy Grid

The move to 1.4kW chips is a challenge for utility planners. A single NVIDIA NVL72 rack now pulls 120kW+. A standard row of 10 racks therefore requires at least 1.2 megawatts of power. This is why companies like Microsoft and Amazon are making aggressive moves to secure nuclear power; the grid simply wasn’t built for the density of the Blackwell Ultra.
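
The rack and row numbers can be checked with simple arithmetic. The sketch below uses only figures from this article; the "non-GPU" remainder is just the difference between the rack figure and the GPU total, and the article does not break it down further.

```python
# Figures quoted in the article.
GPU_KW = 1.4
GPUS_PER_RACK = 72
RACK_KW = 120          # stated as a lower bound ("120kW+")
RACKS_PER_ROW = 10

# Power drawn by the GPUs alone at full TDP.
gpu_only_kw = GPU_KW * GPUS_PER_RACK

# What is left of the rack budget for everything that is not a GPU.
non_gpu_kw = RACK_KW - gpu_only_kw

# Power for a full row, converted to megawatts.
row_mw = RACK_KW * RACKS_PER_ROW / 1000

print(f"GPUs alone: {gpu_only_kw:.1f} kW per rack")
print(f"Everything else: {non_gpu_kw:.1f} kW per rack")
print(f"Row of {RACKS_PER_ROW} racks: {row_mw:.1f} MW")
```

The GPUs account for roughly five sixths of the rack budget on their own, which is why per-chip TDP, rather than networking or CPUs, drives the facility design.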

Impact on AI Research

The B300 enables a shift from “Training” to “Inference-Time Scaling.” Models like o1 use “Chain of Thought” reasoning, which means they spend more time “thinking” before they answer. This requires the massive memory buffers provided by the B300. In short: the B300 is the hardware foundation for the reasoning era of AI.

Challenges & Limitations

- The Energy Wall: Many existing data centers cannot be retrofitted for 120kW racks. The lack of “liquid-ready” real estate is the primary bottleneck for B300 deployment.

- HBM Supply: While 12-hi HBM3e is technically possible, the yields are lower than 8-hi. Any manufacturing difficulty at SK Hynix or Samsung could delay the entire B300 rollout.

- The ROI Question: At what point does the cost of the hardware exceed the value of the intelligence generated? Hyperscalers face intense pressure to prove that USD 100 billion in GPU spend translates to real profit, not just “token” revenue.

What’s Next?

Short-Term (2026)

The transition to the “Rubin” architecture will begin. Rubin will likely move to HBM4, which uses a wider 2048-bit interface. The B300 is the bridge to that future, ensuring that NVIDIA maintains its 90%+ market share during the transition.

Medium-Term (2027-2029)

The industry expects a “System-on-Package” (SoP) revolution. Instead of GPUs sitting on boards, entire servers may be integrated into a single 3D-stacked silicon cube. Power requirements will likely hit 2kW+ per unit.

Long-Term (2030+)

The limitation will no longer be transistors, but the speed of light. Optical interconnects will likely replace copper entirely, moving data between GPUs as light rather than as electrical signals to overcome the “Context Wall.”

What This Means for Industry Stakeholders

The Blackwell Ultra launch proves that the AI cycle is compressing, not slowing down.

For IT Decision Makers:

- Avoid building air-cooled data centers. They are legacy infrastructure the moment the concrete dries.

- Budget for 18-month hardware refresh cycles, rather than the traditional 3-5 years.
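
To see what an 18-month cycle does to a budget, here is a minimal straight-line depreciation sketch. It assumes the same purchase price in every scenario and no resale value at the end of the cycle; both are simplifications for illustration, not claims about actual pricing.

```python
def annual_writeoff_fraction(refresh_months: int) -> float:
    """Fraction of the purchase price written off per year (straight-line)."""
    return 12 / refresh_months


fast = annual_writeoff_fraction(18)   # 18-month refresh
slow_3yr = annual_writeoff_fraction(36)
slow_5yr = annual_writeoff_fraction(60)

print(f"18-month cycle: {fast:.0%} of purchase price per year")
print(f"vs. 3-year cycle: {fast / slow_3yr:.1f}x the annual cost")
print(f"vs. 5-year cycle: {fast / slow_5yr:.1f}x the annual cost")
```

Under these assumptions, the same hardware spend costs two to three times more per year of service than it would on a traditional cycle.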

For AI Developers:

- Design for models that scale with memory capacity. The reasoning model trend is the direct beneficiary of the B300’s 288GB memory pool.
