The most dangerous deception in artificial intelligence is the belief that “scale is all you need.”

For the past three years, the consensus assumption has been that if model labs simply feed architectures more text and exponentially more compute, the systems will eventually master the laws of physics and logic. The hypothesis was simple: reasoning is just a byproduct of language complexity.

That hypothesis is faltering.

While GPT-4 and its successors can compose Shakespearean sonnets about toaster ovens, they still struggle with basic multi-step logic puzzles that a fifth grader would solve in seconds. They hallucinate citations, confidently fail arithmetic, and cannot be trusted to execute autonomous workflows without human supervision.

Nobel laureate Daniel Kahneman popularized a distinction between two cognitive modes. System 1 is rapid and instinctive. System 2 is slow and logical. Contemporary generative models rely exclusively on System 1. They are probabilistic pattern matchers, predicting the next word based on statistical likelihood rather than verification.

To build agents that can perform actual work, such as managing bank accounts, designing infrastructure, or diagnosing diseases, the industry needs to unlock the second mode: slow, deliberative, logical reasoning.

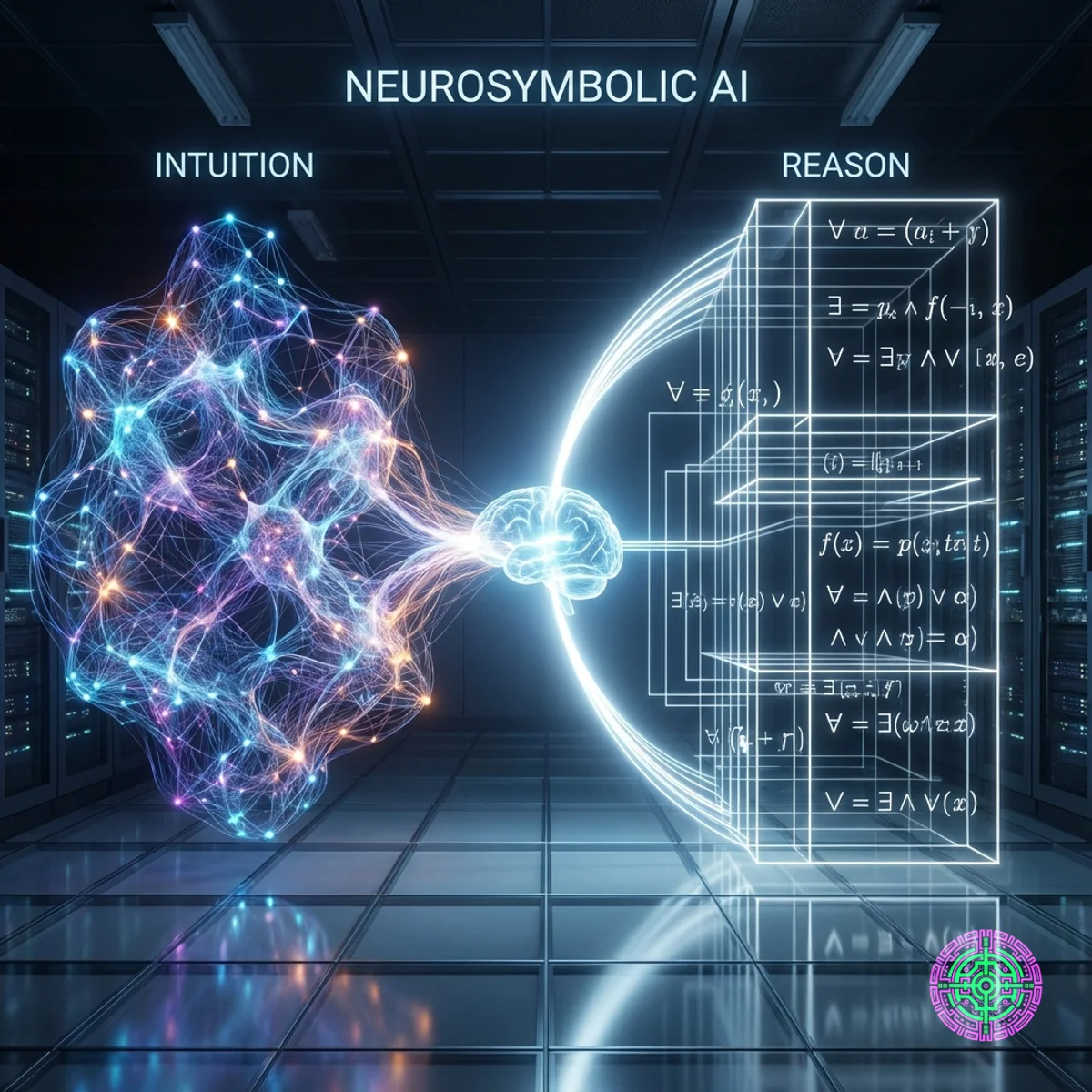

Enter Neurosymbolic AI.

The Broken Promise of Pure Neural Networks

To understand why this shift is happening, it is necessary to look at the failures of the current paradigm.

The “Strawberry” Problem

Ask a standard LLM to count the number of ‘r’s in the word “strawberry.” For a human, this is trivial. For an LLM, it is surprisingly difficult. This is not because the model is “stupid,” but because of tokenization. The model does not see the letters ‘s-t-r-a-w…’; it sees one or a few integer tokens, each standing for a chunk of characters. It cannot directly “see” the letters inside those tokens. Counting them requires first spelling the word out and then tallying, an exact procedure it is fundamentally incapable of performing reliably through probability alone.
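A toy illustration of the gap, assuming an invented three-piece vocabulary (`HYPOTHETICAL_VOCAB`, its token IDs, and the greedy splitter are made up for this sketch; real tokenizers split words differently). The model receives only the integer IDs, while the question is about characters, which a plain symbolic loop counts exactly.

```python
# Hypothetical subword vocabulary: real tokenizers differ; this only illustrates the idea.
HYPOTHETICAL_VOCAB = {"str": 1001, "aw": 1002, "berry": 1003}


def greedy_tokenize(word: str, vocab: dict[str, int]) -> list[int]:
    """Split a word into integer IDs by greedy longest match against the vocabulary."""
    ids = []
    i = 0
    while i < len(word):
        for j in range(len(word), i, -1):  # try the longest remaining piece first
            piece = word[i:j]
            if piece in vocab:
                ids.append(vocab[piece])
                i = j
                break
        else:
            raise ValueError(f"no token covers {word[i:]!r}")
    return ids


def count_letter(word: str, letter: str) -> int:
    """The symbolic step: walk the characters one by one and count exact matches."""
    return sum(1 for ch in word if ch == letter)


print(greedy_tokenize("strawberry", HYPOTHETICAL_VOCAB))  # [1001, 1002, 1003]: no letters in sight
print(count_letter("strawberry", "r"))                     # 3
```

Nothing in `[1001, 1002, 1003]` mentions the letter ‘r’; the count only becomes easy once the word is treated as a sequence of characters.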

The Math Hallucination

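When an LLM solves a math problem like 345 × 921, it does not perform multiplication. It recalls similar patterns from its training data. If the specific numbers are rare or unique, the model guesses the output based on which digits “look” right next to each other. This is why models often get the first and last digits correct but mangle the middle ones: the last digit depends only on the last digits of the two factors (5 × 1), and the leading digits follow from the rough magnitude of the product, whereas each middle digit depends on several partial products plus the carries from the columns below it.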

This probabilistic fuzziness is acceptable for writing poetry. It is catastrophic for structural engineering.
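To see why the middle digits are the fragile ones, here is a sketch of ordinary schoolbook multiplication (the helper name `schoolbook_multiply` is ours, purely illustrative). It computes the product exactly and also reports how many single-digit partial products land in each output column. This describes the structure of the arithmetic, not the internals of any particular model.

```python
def schoolbook_multiply(a: int, b: int) -> tuple[int, list[int]]:
    """Multiply two non-negative integers digit by digit with explicit carries.

    Returns the exact product and, for each output column (least significant
    first), how many single-digit partial products were summed into it.
    """
    da = [int(c) for c in reversed(str(a))]  # digits of a, least significant first
    db = [int(c) for c in reversed(str(b))]  # digits of b, least significant first
    width = len(da) + len(db)                # the product never needs more digits
    sums = [0] * width                       # raw column sums before carrying
    counts = [0] * width                     # partial products per column
    for i, x in enumerate(da):
        for j, y in enumerate(db):
            sums[i + j] += x * y
            counts[i + j] += 1

    # Propagate carries from the least significant column upward.
    digits, carry = [], 0
    for total in sums:
        total += carry
        digits.append(total % 10)
        carry = total // 10

    product = int("".join(str(d) for d in reversed(digits)))
    return product, counts


product, counts = schoolbook_multiply(345, 921)
print(product)  # 317745
print(counts)   # [1, 2, 3, 2, 1, 0]
```

For 345 × 921 the columns receive 1, 2, 3, 2, and 1 partial products (the sixth column holds only the final carry), so the middle digits depend on the most terms and carries. The procedure gets every digit right every time because it follows the rule rather than the look of the answer.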

The Architecture of Reason

Neurosymbolic AI is not a single model. It is a hybrid architecture. It acknowledges that neural networks and symbolic logic are optimized for opposite tasks, and it stops trying to force one to do the job of the other.

- The Neural Layer (Intuition): This component handles “messy” data. It processes pixel values in an image, the ambiguity of human speech, or the “vibe” of a document. It translates the chaotic real world into structured representations.

- The Symbolic Layer (Logic): This is the domain of “Good Old-Fashioned AI” (GOFAI). It deals with hard rules, algebra, formal logic, and knowledge graphs. It does not guess. It calculates.

- The Bridge: The breakthrough lies in the bidirectional communication between these layers. The neural net parses the problem, and the symbolic engine proves the answer.

How It Works in Practice

Consider a physics problem involving projectile motion.

A pure LLM (System 1) draws on patterns learned from similar word problems in its training data and predicts the answer based on probability. If the variables are unusual, it often hallucinates a plausible-sounding but incorrect result.

A Neurosymbolic system operates differently:

- Neural Perception: The LLM reads the text and parses the semantic meaning into a formal representation (e.g., variables $v = 60$, $d = 300$, $a = 9.8$).

- Symbolic Execution: It passes these variables to a symbolic solver (such as a Python calculator or a formal theorem prover). This solver applies the rigid law $d = vt + \frac{1}{2}at^2$.

- Neural Translation: The solver returns the exact numeric result to the neural component, which then wraps it in a natural language sentence for the user.

The neural component never “does the math.” It acts as a translator between the messy human world and the rigid logic world, while the solver does the calculation.
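The three steps can be sketched as a tiny pipeline. This is a minimal illustration under stated assumptions, not how any particular product works. The regex in `neural_perception` is a hypothetical stand-in for the LLM's parsing. The “solver” is simply the quadratic formula: rearranging $d = vt + \frac{1}{2}at^2$ as $\frac{1}{2}at^2 + vt - d = 0$ gives the positive root $t = \frac{-v + \sqrt{v^2 + 2ad}}{a}$ (a real system might call a computer algebra system instead). SI units are assumed because the example leaves them unstated.

```python
import math
import re


def neural_perception(text: str) -> dict[str, float]:
    """Hypothetical stand-in for the LLM's parsing step.

    A real system would ask a language model to extract the variables; a regex
    that pulls out single-letter 'name = number' pairs keeps the sketch runnable.
    """
    pairs = re.findall(r"\b([a-z])\s*=\s*([0-9]+(?:\.[0-9]+)?)", text)
    return {name: float(value) for name, value in pairs}


def symbolic_execution(v: float, d: float, a: float) -> float:
    """Solve d = v*t + 0.5*a*t**2 for the positive root t (assumes a > 0, d >= 0)."""
    # Rearranged: 0.5*a*t**2 + v*t - d = 0, so t = (-v + sqrt(v**2 + 2*a*d)) / a.
    return (-v + math.sqrt(v * v + 2.0 * a * d)) / a


def neural_translation(t: float) -> str:
    """Stand-in for the LLM wrapping the solver's result in a sentence."""
    return f"It takes about {t:.2f} seconds to travel the distance."


problem = ("A ball starts at v = 60 m/s and accelerates at a = 9.8 m/s^2. "
           "How long does it take to travel d = 300 m?")
variables = neural_perception(problem)  # {'v': 60.0, 'a': 9.8, 'd': 300.0}
t = symbolic_execution(variables["v"], variables["d"], variables["a"])
print(neural_translation(t))            # It takes about 3.81 seconds to travel the distance.
```

The design point is the division of labor: the number comes from the formula, and the language components only read the question and format the answer.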

The History of the Feud

This approach ends a fifty-year civil war in computer science.

- The Symbolists (dominant from the 1950s to the 1990s): They believed intelligence was explicit rule manipulation. They built expert systems (such as MYCIN) and search-based programs like IBM’s Deep Blue. These systems were perfectly logical but brittle. If a situation wasn’t covered by an explicitly programmed rule, the system failed. They couldn’t handle the messiness of the real world (e.g., recognizing a cat).

- The Connectionists (dominant from the 2010s to the present): They believed intelligence emerged from massive networks of simple neurons (Deep Learning). This approach won the last decade because it could handle messy data. However, it sacrificed explainability and logic for performance.

Neurosymbolic AI is the peace treaty. It admits that the Symbolists were right about reasoning, and the Connectionists were right about learning.

The Proof: AlphaGeometry and AlphaProof

This trajectory is not mere speculation. Google DeepMind has demonstrated it at the highest level of competition.

In 2024, DeepMind published AlphaGeometry, a neurosymbolic system that solved 25 of 30 olympiad geometry problems drawn from past International Mathematical Olympiads (IMO), approaching the performance of an average IMO gold medalist.

This system utilized a unique hybrid approach:

- A Neural Generator creates “constructs” (like adding a new line to a triangle to help solve a proof). This serves as the “intuition,” suggesting where the solution might be found.

- A Symbolic Engine then attempts to rigorously prove the statement using formal logic.

If the symbolic engine gets stuck, the neural model serves up a new “idea” (an auxiliary construction). They loop back and forth—intuition guiding logic, logic verifying intuition—until the proof is complete.
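The loop can be sketched in a few dozen lines. Everything here is a toy under loud assumptions: the facts and rules are hand-written strings, `forward_chain` is a simple deductive-closure engine rather than AlphaGeometry's actual symbolic engine, and `propose_constructions` is a hypothetical stub that yields a fixed list where AlphaGeometry uses a trained language model. The example is the classic isosceles-triangle argument, where the base angles can only be shown equal after adding the midpoint D of BC.

```python
from typing import Iterator, Optional

# A rule fires when all of its premises are known facts (hand-written for this toy).
Rule = tuple[frozenset[str], str]

RULES: list[Rule] = [
    (frozenset({"AB = AC", "D is the midpoint of BC"}), "triangle ABD is congruent to triangle ACD"),
    (frozenset({"triangle ABD is congruent to triangle ACD"}), "angle ABC = angle ACB"),
]
FACTS = {"ABC is a triangle", "AB = AC"}
GOAL = "angle ABC = angle ACB"


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Symbolic engine: apply rules until no new fact appears (deductive closure)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def propose_constructions(facts: set[str]) -> Iterator[str]:
    """Hypothetical stand-in for the neural generator: a fixed, ranked list of ideas.

    A trained model would condition on `facts`; this stub ignores them.
    """
    yield "E lies on AB"             # a plausible but useless idea
    yield "D is the midpoint of BC"  # the construction that unlocks the proof


def prove(facts: set[str], rules: list[Rule], goal: str, max_ideas: int = 10) -> Optional[list[str]]:
    """Alternate symbolic deduction with neural suggestions until the goal is derived."""
    known = forward_chain(facts, rules)
    ideas = propose_constructions(known)
    added: list[str] = []
    while goal not in known:
        if len(added) >= max_ideas:
            return None
        idea = next(ideas, None)
        if idea is None:
            return None              # the generator has run out of suggestions
        added.append(idea)
        known = forward_chain(known | {idea}, rules)
    return added                     # every construction tried on the way to the goal


print(prove(FACTS, RULES, GOAL))  # ['E lies on AB', 'D is the midpoint of BC']
```

The first suggestion changes nothing, so the engine asks for another; the second lets both rules fire, and the goal appears in the deductive closure.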

AlphaProof and the “Lean” Language

AlphaProof, announced by DeepMind in 2024, took this further. It integrates reinforcement learning with Lean, a formal programming language used for mathematical verification.

Unlike Python, where code can run yet still be logically flawed, Lean is a “proof assistant”: it mechanically checks every step of a proof. If the logic is unsound, the proof does not compile. By forcing the AI to express its reasoning in Lean, DeepMind effectively built a barrier against hallucinated proofs, provided the problem statement itself has been formalized correctly. The model can try a thousand wrong ideas, but the user only sees the one that mathematically compiles.
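A minimal Lean 4 illustration of that behavior, using only core Lean (no Mathlib). These statements are ours, not AlphaProof output: the kernel accepts each proof only after checking it, and the commented-out final line, which claims a wrong product, would be rejected with an error if uncommented.

```lean
-- Lean checks that the proof term really establishes the statement.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- A concrete arithmetic claim, checked by evaluation.
example : 345 * 921 = 317745 := rfl

-- A false claim does not compile: uncommenting this line produces an error.
-- example : 345 * 921 = 317755 := rfl
```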

The Enterprise Gap: Why Banks Hate Chatbots

The shift to Neurosymbolic AI is the prerequisite for the “Agentic Era” in enterprise.

Currently, corporate adoption is stalled by the “Trust Gap.” No CFO will authorize an autonomous supply chain agent that probably orders the right amount of steel. They need an agent that provably orders the amount derived from the inventory database.
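A minimal sketch of the “provably” half of that sentence, assuming a hypothetical inventory record and an order-up-to replenishment policy (`InventoryRecord`, `target_level`, and the formula are illustrative, not a standard schema). The agent may draft the order, but a deterministic rule computes the quantity from the data and rejects any proposal that disagrees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryRecord:
    """Hypothetical inventory row; field names are illustrative assumptions."""
    sku: str
    on_hand: int
    on_order: int
    target_level: int


def derived_order_quantity(rec: InventoryRecord) -> int:
    """Assumed order-up-to policy: bring stock (on hand + on order) back to the target."""
    return max(0, rec.target_level - rec.on_hand - rec.on_order)


def approve_order(rec: InventoryRecord, proposed_qty: int) -> int:
    """Accept an agent's proposed quantity only if it equals the rule-derived amount."""
    expected = derived_order_quantity(rec)
    if proposed_qty != expected:
        raise ValueError(f"{rec.sku}: proposed {proposed_qty}, rule derives {expected}")
    return expected


steel = InventoryRecord(sku="STEEL-PLATE-10MM", on_hand=120, on_order=30, target_level=400)
print(approve_order(steel, 250))   # 250, since 400 - 120 - 30 = 250
try:
    approve_order(steel, 2500)     # a "probably right" guess is rejected
except ValueError as err:
    print("rejected:", err)
```

The rejection message names both numbers, so a reviewer can see exactly why the proposal failed.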

Neurosymbolic systems offer three critical upgrades for business:

- Verifiability: Because the logic occurs in a symbolic layer, auditors can inspect the “thought process.” They can see exactly which rule was applied to deny a loan or approve a transaction.

- Data Efficiency: A neural net needs to see millions of examples of addition to “learn” it. A symbolic system only needs the rule of addition once. This drastically reduces the data requirements for specialized domain tasks.

- Generalization: A neural net struggles with “out of distribution” data (e.g., numbers larger than it has seen in training). A symbolic logic rule works for $x = 1$ and $x = 1{,}000{,}000{,}000$ with equal precision.
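To make verifiability and generalization concrete, here is a sketch of an ordered rule list that returns its decision together with the rule that produced it. The rule names, thresholds, and applicant fields are invented for illustration. The same ratio test applies unchanged whether incomes are in the thousands or the billions.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    applies: Callable[[dict], bool]
    decision: str


# Hypothetical policy, checked in order; thresholds are invented for illustration.
POLICY = [
    Rule("R1: debt-to-income above 0.40",
         lambda a: a["monthly_debt"] / a["monthly_income"] > 0.40, "deny"),
    Rule("R2: credit score below 620", lambda a: a["credit_score"] < 620, "deny"),
    Rule("R3: default", lambda a: True, "approve"),
]


def decide(applicant: dict) -> tuple[str, str]:
    """Return the decision together with the exact rule that produced it (the audit trail)."""
    for rule in POLICY:
        if rule.applies(applicant):
            return rule.decision, rule.name
    raise RuntimeError("policy has no default rule")


small = {"monthly_income": 4_000, "monthly_debt": 2_000, "credit_score": 700}
large = {"monthly_income": 4_000_000_000, "monthly_debt": 1_000_000_000, "credit_score": 700}
print(decide(small))  # ('deny', 'R1: debt-to-income above 0.40')
print(decide(large))  # ('approve', 'R3: default')
```

An auditor reading the output sees not just “deny” but which rule fired, and the rule itself is a line of code that can be reviewed.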

The Spectrum of Hybrid AI

It is important to note that this is not a binary switch. Integration falls along a spectrum; one simplified way to describe it uses three levels:

- Type 1 (Standard): Neural net with symbolic post-processing (e.g., ChatGPT writing Python code to solve math).

- Type 2 (Deep): Symbolic rules are embedded into the loss function of the neural net during training. The model is “penalized” for violating logic, even if the text looks fluent.

- Type 3 (Fully Integrated): Systems like AlphaGeometry where the two components operate in a tight, iterative loop, creating a “reasoning engine” that is greater than the sum of its parts.
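For Type 2, one common way to put a symbolic rule into a loss function is a fuzzy-logic relaxation. The sketch below assumes the product t-norm and a hypothetical rule “square implies rectangle”; the function names are ours. The penalty is p(A) · (1 − p(B)). Its gradient is 1 − p(B) with respect to p(A) and −p(A) with respect to p(B), so minimizing it pushes the model either to raise B or to lower A. In a real training loop these probabilities would come from the network, and an autodiff framework would do the differentiation.

```python
import math


def implication_violation(p_a: float, p_b: float) -> float:
    """Soft truth value of 'A and not B' under the product t-norm.

    It is 0 when the rule 'A implies B' is respected and grows toward 1
    as the model becomes confident in A while denying B.
    """
    return p_a * (1.0 - p_b)


def loss_with_logic(p_b: float, label_b: int, p_a: float, weight: float = 1.0) -> float:
    """Binary cross-entropy on B plus a weighted penalty for breaking 'A implies B'."""
    eps = 1e-12  # guards against log(0)
    bce = -(label_b * math.log(p_b + eps) + (1 - label_b) * math.log(1.0 - p_b + eps))
    return bce + weight * implication_violation(p_a, p_b)


# Hypothetical rule: "is a square" (A) implies "is a rectangle" (B).
consistent = implication_violation(p_a=0.9, p_b=0.95)    # model respects the rule: small penalty
inconsistent = implication_violation(p_a=0.9, p_b=0.10)  # fluent but illogical: large penalty
print(round(consistent, 3), round(inconsistent, 3))
```

The `weight` parameter trades off fitting the labels against obeying the rule; setting it to zero recovers the ordinary loss.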

The Road Ahead

The era of “Pure LLMs” is drawing to a close. The next generation of frontier models (GPT-5, Gemini 3) will likely be neurosymbolic under the hood, hiding the symbolic solvers behind a chat interface.

The “Magic” of AI is fading, and that is a positive development. The industry is trading the magic of a poetry-writing chatbot for the reliability of an engineering-grade tool. It is less exciting at parties, but it is infinitely more valuable for civilization.

The industry is finally giving digital brains a prefrontal cortex.
