The “Walled Garden” has officially fallen. For a decade, Google’s Tensor Processing Units (TPUs) were the forbidden fruit of the AI world. You could rent them inside Google Cloud, observe their power from a distance as they trained AlphaGo and Gemini, but you could never own them. You certainly couldn’t install them in your own data center.

That rule just broke.

In a move that signals a structural shift in the trillion-dollar AI market, emerging reports indicate Google is finalizing deals to rent and eventually sell TPUs directly to Meta and potentially Apple. This is not just a supplier agreement; it is the first real crack in Nvidia’s armor and a desperate, brilliant move by Google to save its own software ecosystem.

If you are holding Nvidia stock or building an AI infrastructure strategy, you need to understand the physics, the economics, and the software wars that drove this decision.

The News: Strange Bedfellows

The strategic alignment is clear. Meta (Facebook) has been the world’s most voracious consumer of Nvidia H100s, accumulating roughly 350,000 of them and nearly 600,000 H100-equivalents of compute in total. But Mark Zuckerberg has been open about his desire to break free from the “Nvidia Tax”: the premium paid for CUDA lock-in and high-margin hardware.

According to industry reports:

- The Deal: Meta will gain access to “bare metal” TPU instances (likely Trillium v6) initially hosted by Google but partitioned completely from Google’s public cloud.

- The Roadmap: By 2027, the framework would allow Meta to purchase TPU pods outright for installation in its own, non-Google data centers.

- The Apple Factor: Similar discussions are occurring with Apple, which needs massive compute for “Apple Intelligence” but is famously allergic to relying on competitors like Microsoft or paying Nvidia’s margins.

This changes the supply/demand equation overnight. If Meta stops buying 20% of Nvidia’s supply because it has switched to TPUs, the scarcity that supports Nvidia’s pricing power evaporates.

Technical Deep Dive: The Physics of Light vs. Copper

Why would Meta want a TPU when the Nvidia Blackwell B200 is the “most powerful chip in the world”? The answer isn’t in the chip itself. It’s in the wires, or rather, the lack of them.

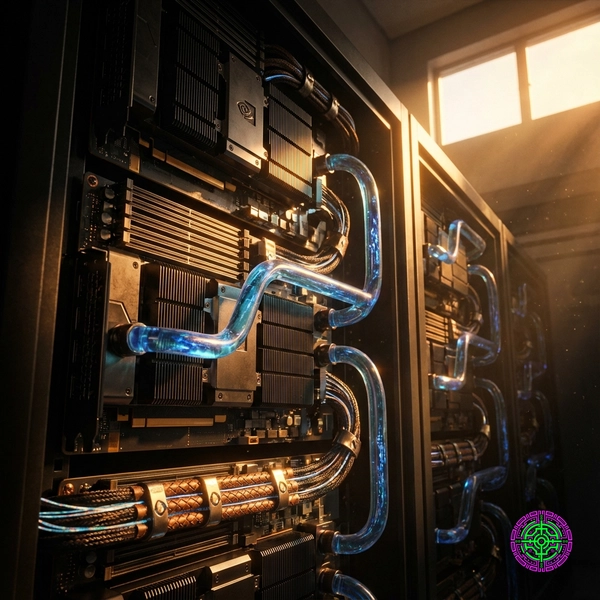

The OCS Advantage (Optical Circuit Switching)

Nvidia’s SuperPODs rely on InfiniBand, a wildly fast but conventional electrically switched network. To connect 100,000 GPUs, you need miles of cabling and tiers of active electrical switches that convert optical signals to electrical and back at every hop.

Google’s secret weapon, deployed since TPU v4, is its Palomar optical circuit switch (OCS), a technology Google has since also built into its Jupiter data center network.

Instead of digital packet switching (where a router reads a packet, buffers it, decides where it goes, and re-transmits it), Google uses MEMS (Micro-Electro-Mechanical Systems): microscopic mirrors that physically tilt to bounce a beam of light from one server to another.
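The difference between the two switching models fits in a few lines of code. This is a toy model under simplifying assumptions, not Google's control software. The class and function names are hypothetical. The idealized OCS is a one-to-one mapping of input ports to output ports, and that mapping stays fixed until someone explicitly reconfigures it. The packet switch, by contrast, has to do a lookup for every packet.

```python
from typing import Dict


class OpticalCircuitSwitch:
    """Hypothetical toy model of an OCS: a fixed permutation of ports.

    Each mirror steers one input beam to one output port, so the configuration
    is a one-to-one mapping that stays in place until reconfigure() is called.
    """

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports
        # Start with the identity circuit: port i -> port i.
        self.circuit: Dict[int, int] = {i: i for i in range(num_ports)}

    def reconfigure(self, mapping: Dict[int, int]) -> None:
        # A valid circuit uses every input port and every output port exactly once.
        if sorted(mapping) != list(range(self.num_ports)):
            raise ValueError("mapping must cover every input port")
        if sorted(mapping.values()) != list(range(self.num_ports)):
            raise ValueError("each output port may be used exactly once")
        self.circuit = dict(mapping)

    def route(self, in_port: int) -> int:
        # No header inspection or buffering: light entering in_port leaves on
        # whatever output the mirrors currently point at.
        return self.circuit[in_port]


def packet_switch_route(dest_table: Dict[str, int], packet_dest: str) -> int:
    # A packet switch (hypothetical helper) must read each packet's
    # destination and look up the output port, packet by packet.
    return dest_table[packet_dest]


ocs = OpticalCircuitSwitch(4)
ocs.reconfigure({0: 2, 1: 3, 2: 0, 3: 1})
print(ocs.route(0))  # 2
print(packet_switch_route({"rack-a": 1, "rack-b": 3}, "rack-b"))  # 3
```

After `reconfigure` runs, `route` does no per-packet work at all. The power and latency claims that follow rest on exactly this property.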

The Physics Wins:

- Speed of Light: There is zero optical-to-electrical conversion. The light just bounces.

- Near-Zero Power: An OCS still needs power for its control electronics, but holding a mirror in position takes very little, and no energy is spent converting or processing the signal. An electrical switch draws substantial power 24/7 just to keep its ports and packet-processing logic active.

- Topology Agnostic: Google can physically re-wire the network in milliseconds. If a “Torus” shape is better for Training Job A, and a “Dragonfly” shape is better for Inference Job B, the mirrors just tilt, and the supercomputer is physically re-wired.
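The “Torus” option refers to the 3D torus that TPU pods use for training. In a 3D torus, each chip links to its neighbors along three axes, and the edges wrap around. The sketch below enumerates the links such a topology needs, assuming an illustrative 4x4x4 block of 64 chips. The function names are hypothetical. With OCS, changing shape amounts to asking the mirrors for a different set of these links.

```python
from typing import List, Set, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(chip: Coord, dims: Coord) -> List[Coord]:
    """Return the 6 neighbors of `chip` in a 3D torus of shape `dims`.

    Each axis wraps around, so the last chip on an axis links back to the first.
    """
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            coord = list(chip)
            coord[axis] = (coord[axis] + step) % dims[axis]
            neighbors.append((coord[0], coord[1], coord[2]))
    return neighbors


def torus_links(dims: Coord) -> Set[frozenset]:
    """All undirected chip-to-chip links the interconnect must provide."""
    links = set()
    for x in range(dims[0]):
        for y in range(dims[1]):
            for z in range(dims[2]):
                for n in torus_neighbors((x, y, z), dims):
                    links.add(frozenset(((x, y, z), n)))
    return links


print(torus_neighbors((0, 0, 0), (4, 4, 4)))
print(len(torus_links((4, 4, 4))))  # 192 = 64 chips * 6 links / 2
```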

The Efficiency Math

While Nvidia chases raw FLOPs, Google chases Performance/TCO. Google claims its Trillium (v6) TPU is 67% more energy-efficient than the v5e, and at pod scale the interconnect efficiency adds to that per-chip gain.

Let’s look at the theoretical cooling math. Electrical switches generate heat. That heat must be cooled. OCS switches generate almost no heat.

In an Nvidia cluster, networking power can be 15-20% of the total cluster draw. In a TPU pod, OCS reduces that networking power draw by nearly 95%. In a 100MW data center, that frees roughly 14-19 MW, enough for about 17-24% more compute in the same power envelope. For Meta, training Llama 5, that is worth billions.
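A few lines of Python can check the power-envelope arithmetic. The 15-20% networking share and the 95% reduction come from the paragraph above. The sketch also makes two simplifying assumptions: every watt saved on networking can be redirected to compute, and cooling overhead scales with total draw. Under those assumptions the calculation can work in shares of the facility budget.

```python
def extra_compute_fraction(network_share: float, network_reduction: float) -> float:
    """Relative compute gain when networking power is cut and the savings go to compute.

    network_share: fraction of total facility power spent on networking (e.g. 0.20).
    network_reduction: fraction of that networking power eliminated (e.g. 0.95).
    """
    compute_before = 1.0 - network_share
    freed = network_share * network_reduction
    return freed / compute_before


facility_mw = 100.0
for share in (0.15, 0.20):
    gain = extra_compute_fraction(share, 0.95)
    freed_mw = facility_mw * share * 0.95
    print(f"network share {share:.0%}: frees {freed_mw:.2f} MW, +{gain:.1%} compute")
```

The gain is `share * reduction / (1 - share)`. Even at the top of the quoted range, the freed power supports roughly a quarter more compute, not more.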

The Software War: JAX vs. CUDA

This is the part Wall Street misses. Google isn’t just selling chips because it wants hardware revenue. It’s selling chips to save JAX.

The CUDA Moat

Nvidia’s monopoly isn’t hardware; it’s software. Nearly everyone builds on CUDA, usually indirectly through PyTorch. Because everyone uses CUDA, everyone buys Nvidia. It’s a self-reinforcing loop.

Google’s JAX

Google’s own framework, JAX, is arguably superior for advanced physics and heavy math. It compiles programs through the XLA (Accelerated Linear Algebra) compiler, which optimizes them for the target hardware automatically. But outside of DeepMind and specialized researchers, adoption is low.
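For readers who have not used JAX, here is a minimal sketch of what compiling through XLA looks like in practice. `jax.jit` traces an ordinary Python function and hands the resulting program to XLA. XLA can then fuse the matrix multiply and activation for whatever backend is present (CPU, GPU, or TPU). The function name and shapes are illustrative assumptions, and the sketch requires the `jax` package.

```python
import jax
import jax.numpy as jnp


def dense_gelu(x, w):
    # A matrix multiply followed by an elementwise GELU activation.
    return jax.nn.gelu(x @ w)


# jax.jit traces dense_gelu once per input shape/dtype and compiles it with XLA.
dense_gelu_compiled = jax.jit(dense_gelu)

x = jnp.ones((4, 8))
w = jnp.full((8, 2), 0.1)
y = dense_gelu_compiled(x, w)
print(y.shape)  # (4, 2)

# Inspect the intermediate program JAX hands to XLA before backend code generation.
print(jax.jit(dense_gelu).lower(x, w).as_text()[:300])
```

The same Python function runs unchanged on a TPU or a GPU. Only the XLA backend differs, which is the portability argument the next paragraph makes for PyTorch.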

By putting TPUs into Meta’s hands, Google is forcing Meta’s engineers, the best in the world outside of Google, to optimize PyTorch for XLA.

- If PyTorch runs perfectly on TPUs (via XLA), the “CUDA Moat” fills with sand.

- Suddenly, AMD chips (which XLA can also target via ROCm) become viable.

- Intel Gaudi becomes viable.

- The entire ecosystem de-couples from Nvidia.

Contextual History: A Decade of Secrets

Google invented the Transformer architecture (the “T” in GPT) in 2017. They built the first TPU in 2015. They were years ahead. Why did they lose?

- 2016 (TPU v1): Unveiled after a year in internal production. Built purely for inference (running AlphaGo).

- 2017-2018 (TPU v2/v3): The training beasts; v3 added liquid cooling. But Google kept them locked in its cloud. “If one wants the chip, one must use the cloud.”

- 2020-2023 (The Hubris): Google assumed their hardware advantage was infinite. They didn’t sell the chips.

- 2024 (The Panic): Microsoft and OpenAI seized the lead using Nvidia GPUs. AWS built Trainium.

- 2025 (The Pivot): Google realized that “Cloud Exclusivity” is a death sentence. Developers go where the chips are. If the chips aren’t everywhere, the developers leave.

This sale is an admission of failure in strategy, but a stroke of genius in recovery.

Financial Analysis: The “Nvidia Tax” Calculation

Let’s run the numbers on why Meta is taking this deal.

The Nvidia Markup:

- H100 Manufacturing Cost (TSMC + CoWoS + HBM): ~$3,000 - $4,000

- H100 Sale Price: ~$25,000 - $30,000

- Margin: ~85%
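The margin follows directly from the two estimates above. This sketch brackets them, pairing the cheapest sale with the costliest build and vice versa. The inputs are the article's estimates, not audited figures. The result shows that ~85% sits near the low end of the implied range.

```python
def gross_margin(price: float, unit_cost: float) -> float:
    """Per-unit gross margin as a fraction of the sale price."""
    return (price - unit_cost) / price


# Bracket the estimates from the list above (USD per H100).
low = gross_margin(price=25_000, unit_cost=4_000)   # cheapest sale, most expensive build
high = gross_margin(price=30_000, unit_cost=3_000)  # priciest sale, cheapest build
print(f"H100 per-unit margin: {low:.0%} to {high:.0%}")  # H100 per-unit margin: 84% to 90%
```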

The TPU Economics: Google designs the TPU in-house and uses Broadcom for backend design. They don’t need to make an 85% margin on Meta. They can sell the TPU for $15,000, make a healthy 50% gross margin, and still offer Meta a 50% discount relative to a $30,000 H100.

For a cluster of 100,000 chips:

- Nvidia Cost: $3 Billion (100,000 × $30,000, chips only)

- TPU Cost: $1.5 Billion (100,000 × $15,000, chips only)

Savings: $1.5 Billion. That pays for the entire cooling infrastructure of the data center.
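The cluster comparison can be reproduced in a few lines. The sketch covers chip purchase cost only and ignores networking, power, and facilities. The $30,000 and $15,000 unit prices are the figures used above. It also makes explicit that the 50% discount holds against the top of the H100 price range, and shrinks to 40% against a $25,000 H100.

```python
CHIPS = 100_000
NVIDIA_PRICE = 30_000      # USD per H100, top of the range above
NVIDIA_PRICE_LOW = 25_000  # USD per H100, bottom of the range above
TPU_PRICE = 15_000         # USD per TPU, the article's hypothetical price

nvidia_total = CHIPS * NVIDIA_PRICE
tpu_total = CHIPS * TPU_PRICE
savings = nvidia_total - tpu_total

# Implied cost per TPU if Google keeps a 50% gross margin at that price.
implied_tpu_cost = TPU_PRICE * (1 - 0.50)

discount_vs_high = 1 - TPU_PRICE / NVIDIA_PRICE
discount_vs_low = 1 - TPU_PRICE / NVIDIA_PRICE_LOW

print(f"Nvidia: ${nvidia_total / 1e9:.1f}B, TPU: ${tpu_total / 1e9:.1f}B, savings: ${savings / 1e9:.1f}B")
print(f"Implied TPU unit cost at 50% margin: ${implied_tpu_cost:,.0f}")
print(f"Discount vs $30k H100: {discount_vs_high:.0%}, vs $25k H100: {discount_vs_low:.0%}")
```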

Forward-Looking Analysis: The Chip Alliance

This move effectively creates an “Anti-Nvidia Alliance.”

- Google: Gains hardware revenue, keeps JAX relevant, and hurts its biggest rival (Nvidia).

- Meta/Apple: Gain leverage to negotiate lower prices with Nvidia and diversify their supply chains.

- Amazon: Already has Trainium, now sees validation of the non-Nvidia path.

The Market Impact: The industry is moving from a Monopoly (Nvidia has 90% share) to an Oligopoly (Nvidia, Google, AWS, AMD).

- Short Term (1-2 years): Nvidia remains king. The supply backlog is too long, and software takes time to port.

- Medium Term (3-5 years): Margins compress. This specific news is the signal that the 80% gross margins Nvidia enjoys are likely peaking.

Verdict: The hardware is real. The physics (OCS) is superior for specific workloads. If Google can deliver the supply, the “Code Red” is no longer just for Google; it’s for Jensen Huang.
