The Hook: AI’s Insatiable Hunger

Artificial Intelligence is eating the world’s electricity. By one widely cited estimate, a single ChatGPT query consumes nearly 10 times as much electricity as a standard Google search. As models grow larger and more capable, their thirst for power, and for the water needed to cool the massive data centers that house them, is becoming harder to sustain.

We are running out of grid capacity. We are running out of water for cooling. But what if the solution wasn’t on Earth at all?

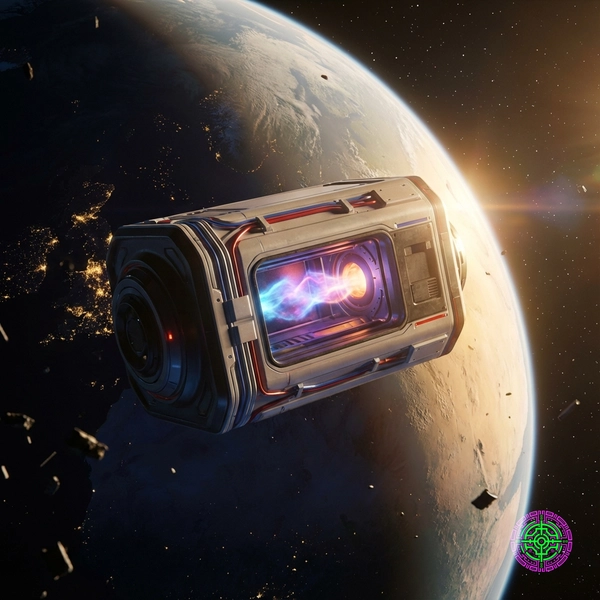

Enter Space-Based Data Centers (SBDCs). It sounds like science fiction, but for tech giants and ambitious startups, it’s becoming a serious business strategy. By moving compute infrastructure to orbit, we could tap into near-continuous solar energy and shed heat by radiating it into space, potentially easing AI’s pressure on power grids and water supplies in one fell swoop.

The Problem: Terrestrial Limits

To understand why we’d go to the trouble of launching servers on rockets, we have to look at the limitations down here on Earth:

- Energy Scarcity: Data centers currently consume about 1-2% of global electricity, a figure that some projections expect to double or even triple by 2030.

- Cooling Costs: Keeping servers from overheating requires massive amounts of water or energy-intensive air conditioning.

- Land Use: “Hyperscale” facilities require hundreds of acres of land, often competing with residential or agricultural needs.

The Solution: Why Space?

Space offers two critical advantages that Earth cannot match:

1. 24/7 Solar Power

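On Earth, solar power is intermittent: it doesn’t work at night and drops off when it’s cloudy. In orbit, specifically in a dawn-dusk sun-synchronous orbit, a satellite can be bathed in sunlight nearly 24/7. There is no atmosphere to absorb or scatter the light, so each panel receives more energy (roughly 1,360 W/m² above the atmosphere versus about 1,000 W/m² at the surface under ideal conditions) and delivers significantly more output over a full day.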
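To put rough numbers on the solar argument, here is a minimal sketch that compares the time-averaged output of one square meter of panel in orbit and on the ground. The panel efficiency, the orbital sunlit fraction, and the ground capacity factor are illustrative assumptions rather than figures from any of the projects discussed here, and multiplying peak irradiance by a capacity factor is a deliberately crude model of a ground installation.

```python
# Back-of-the-envelope comparison of average solar output per square meter.
# All ASSUMED_* values are illustrative guesses, not measured project data.

SOLAR_CONSTANT_W_M2 = 1361.0  # mean irradiance above the atmosphere at Earth's distance
GROUND_PEAK_W_M2 = 1000.0     # conventional peak irradiance at the surface (clear sky, noon)

ASSUMED_EFFICIENCY = 0.20              # same panel technology in both cases
ASSUMED_ORBIT_SUNLIT_FRACTION = 0.99   # dawn-dusk orbit, almost never in shadow
ASSUMED_GROUND_CAPACITY_FACTOR = 0.20  # night, weather, and sun angle combined


def average_output_w_per_m2(irradiance_w_m2, panel_efficiency, sunlit_fraction):
    """Time-averaged electrical output of one square meter of panel."""
    return irradiance_w_m2 * panel_efficiency * sunlit_fraction


orbit_w = average_output_w_per_m2(
    SOLAR_CONSTANT_W_M2, ASSUMED_EFFICIENCY, ASSUMED_ORBIT_SUNLIT_FRACTION
)
ground_w = average_output_w_per_m2(
    GROUND_PEAK_W_M2, ASSUMED_EFFICIENCY, ASSUMED_GROUND_CAPACITY_FACTOR
)

print(f"Orbit:  {orbit_w:.0f} W per square meter on average")
print(f"Ground: {ground_w:.0f} W per square meter on average")
print(f"Ratio:  {orbit_w / ground_w:.1f}x")
```

Under these assumptions, most of the advantage comes from the near-constant sunlight rather than from the stronger irradiance, so the ratio is very sensitive to the ground capacity factor you plug in.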

2. Radiative Cooling

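Space is cold. Very cold. Instead of pumping water to cool servers, space-based data centers can use radiative cooling. There is no air in orbit to carry heat away, so the heat generated by the chips has to be moved to large radiator panels, which emit it as infrared light into deep space. This eliminates the need for cooling water, although the radiators must be sized for the full heat load, and a pumped coolant loop or heat pipes are typically still needed to carry heat from the chips to the panels.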
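How much radiator does that take? A rough sketch using the Stefan-Boltzmann law gives a sense of scale. The emissivity, radiator temperature, and deep-space sink temperature below are assumptions chosen for illustration, and the model ignores sunlight and Earth’s infrared glow falling on the panel, both of which would increase the area needed.

```python
# Idealized radiator sizing with the Stefan-Boltzmann law:
#   radiated power = emissivity * sigma * area * (T_radiator^4 - T_sink^4)
# Assumes a single-sided panel facing deep space with no incoming sunlight
# or Earth infrared (a simplification that understates the real area).

STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 * K^4)


def radiator_area_m2(heat_load_w, radiator_temp_k, emissivity=0.9, sink_temp_k=3.0):
    """Panel area needed to reject heat_load_w purely by radiation."""
    net_flux_w_m2 = emissivity * STEFAN_BOLTZMANN * (
        radiator_temp_k**4 - sink_temp_k**4
    )
    if net_flux_w_m2 <= 0:
        raise ValueError("radiator must be warmer than its surroundings")
    return heat_load_w / net_flux_w_m2


# Example: rejecting 1 kW (about one high-end GPU plus overhead) at 300 K.
area_300k = radiator_area_m2(1_000, 300.0)
area_350k = radiator_area_m2(1_000, 350.0)
print(f"300 K radiator: {area_300k:.2f} square meters per kW")
print(f"350 K radiator: {area_350k:.2f} square meters per kW")
```

Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks it quickly, which is one reason thermal design is a central engineering problem for orbital compute rather than a free benefit.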

Key Players and Innovations

Several companies are already racing to stake their claim in orbital compute.

Starcloud (NVIDIA Inception)

Starcloud, a member of NVIDIA’s Inception program, is one of the pioneers in this space. They are designing satellite constellations that function as orbital GPU clusters. Their approach focuses on:

- Green AI: Using solar power in orbit to train models without the operational carbon footprint of a grid-powered facility.

- Sovereignty: Providing data processing capabilities that aren’t tied to a specific country’s power grid.

Google’s Project Suncatcher

Google researchers have proposed a system called Project Suncatcher. Their research explores a scalable infrastructure using Tensor Processing Units (TPUs) in Low Earth Orbit (LEO).

- Optical Links: To give the satellites the high-bandwidth connections that distributed AI workloads need, they propose using high-speed optical (laser) inter-satellite links, creating a mesh network in the sky.

- Prototype Goals: Google is targeting prototype launches as early as 2027.

Lumen Orbit (now Starcloud)

Lumen Orbit is not a separate startup: it is the name Starcloud operated under before rebranding. Under that name, the company made headlines with plans to build mega-constellations of data centers, and it partnered with Ansys to simulate the thermal challenges of operating high-performance chips in a vacuum.

Technical Analysis: How It Works

Putting a server in space isn’t as simple as launching a rack from a standard data center.

- Radiation Hardening: Space is filled with cosmic radiation that can flip bits and destroy electronics. Hardware must be “hardened” or designed with redundancy to survive.

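- Latency: The speed of light is fast, but distance matters. LEO (Low Earth Orbit) is close enough (500-2000 km) that propagation adds only a few milliseconds each way, which is fine for training runs or batch processing. Ground-station routing and limited link bandwidth add further delay, so it might still be too slow for real-time gaming.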

- Maintenance: You can’t send a technician to swap a failed hard drive. Systems must be autonomous and highly reliable.
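The latency point above can be checked with simple arithmetic. This sketch computes the best-case propagation delay for a satellite directly overhead at the LEO altitudes mentioned. It is a lower bound only: real paths are longer when the satellite is near the horizon, and routing, queuing, and processing delays are ignored.

```python
# Best-case signal propagation delay between the ground and a satellite
# directly overhead. Real-world latency is higher (slant range, routing,
# queuing, processing); this is only the speed-of-light floor.

SPEED_OF_LIGHT_KM_S = 299_792.458


def one_way_delay_ms(altitude_km):
    """Milliseconds for light to travel straight up to the given altitude."""
    return altitude_km / SPEED_OF_LIGHT_KM_S * 1000.0


def round_trip_delay_ms(altitude_km):
    """Milliseconds for a signal to go up and come straight back down."""
    return 2.0 * one_way_delay_ms(altitude_km)


for altitude_km in (500, 2000):
    print(
        f"{altitude_km} km: "
        f"{one_way_delay_ms(altitude_km):.2f} ms one way, "
        f"{round_trip_delay_ms(altitude_km):.2f} ms round trip"
    )
```

Even at the top of the LEO range, the physical floor is well under 15 ms round trip, so any larger delays come from the network path rather than from distance itself.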

Impact: What This Means for the Future

If successful, space-based data centers could decouple AI progress from environmental destruction.

- For the Planet: It moves the most energy-intensive part of the digital economy off-world.

- For AI Development: It removes the “energy ceiling,” allowing models to grow larger without crashing national power grids.

- For You: It could help keep the services you rely on, from LLMs to cloud storage, available and affordable even as demand spikes.

The Bottom Line

We are witnessing the birth of a new industry. Just as the cloud moved data from on-premises closets to massive server farms, the “Sky Cloud” promises to move it from Earth to orbit. While challenges like launch costs and radiation remain, the plummeting cost of access to space (driven largely by reusable rockets such as SpaceX’s) is making this once-crazy idea look increasingly viable.

The next great leap in AI won’t just be in software; it will be in where the hardware lives. And it looks like it’s going to be out of this world.
