When Tim Cook announced “Apple Intelligence,” the pitch was simple: Privacy. Unlike OpenAI or Google, who want to suck your data into their massive server farms, Apple wants the AI to live on your phone. They want the “Brain” to sit in your pocket.

It sounds noble. It sounds secure. But there is a reason no one else has done it yet. It has nothing to do with code quality. It is entirely about Thermodynamics and Memory Bandwidth.

Apple is not fighting Google; they are fighting physics.

The Hook: The “Token” Problem

Understanding why Siri is still dumb in 2025 requires looking at memory bandwidth. An LLM is just a giant file of weights (numbers). A 7-billion-parameter model quantized to int4 (4 bits, or half a byte, per weight) is about 4GB in size.

To generate a single token (roughly a word), the processor has to read that entire 4GB file from the RAM chips into the Compute Units. The on-chip caches hold only megabytes, so nothing stays put between tokens. To generate a sentence of 20 tokens? It has to read that 4GB file 20 times.
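The 4GB figure follows directly from the bit width. Below is a minimal sketch of symmetric int4 quantization, assuming one shared scale per block of weights; the helper names are hypothetical, and real schemes (including whatever Apple ships) differ in group size, rounding, and overhead. Each weight becomes a 4-bit integer in [-8, 7], so two weights pack into one byte.

```python
def quantize_int4(block):
    """Symmetric int4 quantization of one block of float weights.

    Hypothetical helper for illustration; returns (scale, codes) with
    every code an integer in [-8, 7].
    """
    max_abs = max(abs(w) for w in block)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in block]
    return scale, codes


def pack_nibbles(codes):
    """Pack pairs of signed 4-bit codes into bytes: two weights per byte."""
    if len(codes) % 2:
        codes = codes + [0]  # pad to an even count
    out = bytearray()
    for lo, hi in zip(codes[0::2], codes[1::2]):
        # & 0xF keeps the low 4 bits (two's complement for negatives)
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


def dequantize_int4(scale, codes):
    """Approximate reconstruction of the original floats."""
    return [c * scale for c in codes]


def int4_size_gb(num_params):
    """Raw weight storage at 4 bits per parameter, ignoring scales and metadata."""
    return num_params * 4 / 8 / 1e9


block = [0.12, -0.40, 0.33, 0.07, -0.21, 0.40, -0.05, 0.18]
scale, codes = quantize_int4(block)
packed = pack_nibbles(codes)
print(len(block), "weights ->", len(packed), "bytes")  # 8 weights -> 4 bytes
print(int4_size_gb(7e9), "GB for 7B parameters")       # 3.5 GB
```

The 3.5GB of raw weights, plus per-block scales and other overhead, is where the "about 4GB" comes from. The precision lost in rounding is the "dumber" part of quantization.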

Deep Dive: The Memory Wall

This is the “Memory Bandwidth Bottleneck.”

- The Problem: Your phone’s CPU is fast (3 GHz). But your phone’s memory (LPDDR5X) is a slow straw.

- The Math: An iPhone 16 Pro has a memory bandwidth of roughly 50 GB/s.

- The Reality: If you have to move a 4GB model 20 times a second (a smooth, chatbot-like streaming speed), you need 80 GB/s of bandwidth just for the AI. That is more than the phone’s entire memory system can deliver.

Even at a slower token rate, the AI would saturate the memory bus, leaving almost nothing for the rest of the phone. This leads to “UI Jank.” If the AI is talking, your scrolling stutters. The phone feels broken.
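The bandwidth argument reduces to two lines of arithmetic. A minimal sketch, assuming decoding is purely bandwidth-bound: each generated token reads every weight once, while compute time, on-chip caches, and KV-cache traffic (which would only add load) are ignored. The function names are illustrative.

```python
def required_bandwidth_gbs(model_gb: float, tokens_per_s: float) -> float:
    """GB/s needed just to stream every weight once per generated token."""
    return model_gb * tokens_per_s


def max_tokens_per_s(bandwidth_gbs: float, model_gb: float) -> float:
    """Decode-speed ceiling if the model had the whole memory bus to itself."""
    return bandwidth_gbs / model_gb


MODEL_GB = 4.0       # int4 7B model, figure from the text
PHONE_BW_GBS = 50.0  # approximate iPhone 16 Pro bandwidth, figure from the text

print(required_bandwidth_gbs(MODEL_GB, 20))      # 80.0 GB/s for 20 tokens/s
print(max_tokens_per_s(PHONE_BW_GBS, MODEL_GB))  # 12.5 tokens/s at best
```

Turned around, a 4GB model can never exceed about 12 tokens per second on a 50 GB/s bus, even if nothing else on the phone touched memory.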

The Thermal Envelope: 5 Watts vs. 700 Watts

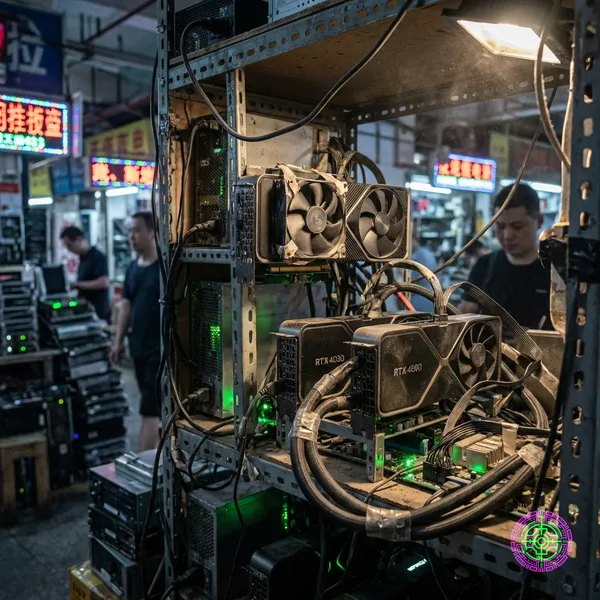

An Nvidia H100 GPU in a data center draws up to 700 Watts and needs industrial-scale cooling, often liquid, to keep it from overheating. An iPhone has a total thermal budget of about 5 Watts. If it goes higher, the battery drains in 45 minutes and the case gets too hot to hold.
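Battery runtime is just stored energy divided by power draw, which makes the 5-Watt budget easy to sanity-check. A minimal sketch; the battery capacity below is an assumption (roughly 13 Wh, in the range of recent Pro iPhones), not an official Apple figure.

```python
BATTERY_WH = 13.0  # ASSUMED battery energy in watt-hours, not an Apple spec


def runtime_minutes(power_w: float, battery_wh: float = BATTERY_WH) -> float:
    """Minutes until empty at a constant average power draw."""
    return battery_wh / power_w * 60


def implied_power_w(minutes: float, battery_wh: float = BATTERY_WH) -> float:
    """Average power draw that would empty the battery in `minutes`."""
    return battery_wh / (minutes / 60)


print(round(runtime_minutes(5)))      # 156 minutes at the 5 W budget
print(round(implied_power_w(45), 1))  # 17.3 W to drain it in 45 minutes
```

Under this assumption, staying inside the 5-Watt budget gives over two hours of continuous load; draining in 45 minutes implies a sustained draw above 17 W, several times what the phone can shed as heat.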

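Apple’s engineers are trying to squeeze a 700-watt algorithm into a 5-watt envelope. They are doing this through extreme quantization (storing each weight in fewer bits, which makes the model smaller and faster but less accurate) and “Speculative Decoding” (a small draft model guesses a few tokens ahead, and the big model checks them all in a single pass).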
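Speculative decoding attacks the bandwidth problem directly: if the big model can verify several guessed tokens in one pass, one read of its weights yields several tokens. Below is a minimal greedy sketch with toy stand-in models; `target`, `draft`, and `k=3` are illustrative assumptions, not Apple's implementation. On real hardware the verification is one batched forward pass; here it is simulated with sequential calls, and `target_passes` counts how many such passes would happen.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[int]], int]  # toy greedy model: context -> next token


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       num_tokens: int, k: int = 3) -> Tuple[List[int], int]:
    """Greedy speculative decoding; output matches plain greedy `target`."""
    out = list(prompt)
    target_passes = 0
    while len(out) - len(prompt) < num_tokens:
        # 1. The cheap draft model guesses k tokens autoregressively.
        proposal, ctx = [], list(out)
        for _ in range(k):
            t = draft(ctx)
            proposal.append(t)
            ctx.append(t)
        # 2. One (simulated) big-model pass checks every guessed position.
        target_passes += 1
        accepted, ctx = [], list(out)
        for t in proposal:
            expected = target(ctx)
            if t != expected:
                accepted.append(expected)  # first miss: keep target's token, stop
                break
            accepted.append(t)
            ctx.append(t)
        else:
            accepted.append(target(ctx))  # all k accepted: one bonus token
        out.extend(accepted)
    return out[len(prompt):len(prompt) + num_tokens], target_passes


def target(ctx: List[int]) -> int:
    return (ctx[-1] * 3 + 1) % 10


def draft(ctx: List[int]) -> int:
    guess = (ctx[-1] * 3 + 1) % 10
    return 0 if ctx[-1] == 4 else guess  # toy draft that is wrong after a 4


tokens, passes = speculative_decode(target, draft, [1], 12, k=3)
print(tokens)  # [4, 3, 0, 1, 4, 3, 0, 1, 4, 3, 0, 1]
print(passes)  # 4 big-model passes instead of 12
```

The output is identical to running the big model alone; only the number of expensive weight reads drops, and the savings depend entirely on how often the draft model guesses right.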

But the heat doesn’t lie. Early tests of iOS 19 show that heavy AI usage dims the screen brightness after 3 minutes. That is thermal throttling: the phone cuts power to shed heat and protect the battery.

Contextual History: The RAM Stinginess Comes Back to Bite

For years, Apple was stingy with RAM. An iPhone would have 6GB while a Samsung Galaxy had 12GB. Apple argued, “The OS is efficient! It doesn’t need more RAM.” They were right for apps. They were wrong for AI.

Because the AI model has to stay “Hot” (loaded in RAM) to be responsive, that 4GB model eats 50% of an 8GB iPhone 16 Pro’s memory.

- The Result: When you ask Siri a question, the phone has to “kill” Instagram and Safari to make room for Siri’s brain.

- The User Experience: You go back to Safari, and the page reloads.

This is why the iPhone 18 is rumored to jump to 12GB or 16GB of RAM. It’s not for games. It’s to keep the AI brain alive without killing your other apps.
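The RAM squeeze is simple division. A minimal sketch, assuming a 4GB model that stays resident and ignoring the OS's own footprint (which makes the real picture tighter); the RAM sizes are the older 6GB iPhones, the 8GB iPhone 16 Pro, and the rumored 12GB and 16GB configurations.

```python
MODEL_GB = 4  # resident on-device model size, figure from the text


def model_share(ram_gb: float, model_gb: float = MODEL_GB) -> float:
    """Fraction of total RAM the always-loaded model occupies."""
    return model_gb / ram_gb


# 6GB: older iPhones; 8GB: iPhone 16 Pro; 12/16GB: the rumored jump.
for ram_gb in (6, 8, 12, 16):
    left = ram_gb - MODEL_GB
    print(f"{ram_gb:>2}GB phone: model uses {model_share(ram_gb):.0%}, "
          f"{left}GB left for everything else")
```

Doubling RAM from 8GB to 16GB triples the memory left for apps, from 4GB to 12GB, which is why the rumored jump matters more than the headline number suggests.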

Forward-Looking Analysis: The “Private Cloud” Compromise

Apple knows on-device is losing the quality war. A 3B-parameter model on a phone will never be as smart as a frontier cloud model like GPT-4, whose size OpenAI has never published but which is widely rumored to exceed a trillion parameters.

That is why they built Private Cloud Compute (PCC). It is an admission that physics won. When your request is too hard for the phone (thermal/memory limits), it shoots it to an Apple Silicon server farm. Apple swears the data is deleted instantly. Users have to trust them.

But the future isn’t pure “On-Device.” It is hybrid.

- Tiny Brain (Phone): Handles “Turn on the lights,” “Read this text,” “Set a timer.”

- Big Brain (Cloud): Handles “Write an email to the boss,” “Summarize this PDF,” “Generate an image.”
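The split can be pictured as a simple router. A minimal sketch, assuming the decision depends only on how much text must be read and generated and whether an image is needed; the `Request` fields, thresholds, and `route` function are hypothetical, since Apple has not published exactly how Apple Intelligence decides when to hand off to Private Cloud Compute.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt: str
    est_output_tokens: int  # hypothetical estimate of how much text to generate
    needs_image: bool = False


# Hypothetical thresholds for illustration only, not Apple's policy.
MAX_LOCAL_OUTPUT_TOKENS = 50
MAX_LOCAL_PROMPT_CHARS = 500


def route(req: Request) -> str:
    """Keep short, cheap requests on the phone; send heavy ones to the cloud."""
    if req.needs_image:
        return "cloud"  # image generation is far beyond the phone's budget
    if req.est_output_tokens > MAX_LOCAL_OUTPUT_TOKENS:
        return "cloud"  # long generations mean sustained bandwidth and heat
    if len(req.prompt) > MAX_LOCAL_PROMPT_CHARS:
        return "cloud"  # long inputs (e.g. a PDF) blow up memory use
    return "device"


print(route(Request("Set a timer for 10 minutes", est_output_tokens=10)))
print(route(Request("Write an email to the boss", est_output_tokens=300)))
```

The design choice is that "Burst" work stays local while anything that implies sustained token generation goes to the server, mirroring the Tiny Brain / Big Brain split above.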

The Bottom Line

The new Siri isn’t struggling because Apple’s engineers forgot how to code. It is struggling because the definition of “Computation” changed overnight from “Burst” (opening an app) to “Sustained” (generating tokens). Until battery chemistry or memory physics changes, the dream of a super-intelligent, totally private AI in your pocket will remain just that: a dream.
